DFID’s approach to value for money in programme and portfolio management

Executive summary

All UK government departments are required to achieve value for money in their use of public funds. In recent years, DFID has been working to build value for money considerations further into its management processes and its relationships with implementers and multilateral partners, establishing itself as a global champion on value for money. Against the background of the 0.7% aid spending commitment, the pledge to achieve 100 pence of value for every pound of aid spent has become central to the political case for the aid programme.

In this performance review, we explore DFID’s value for money approach, its progress on embedding value for money into its management processes and whether its efforts are in fact helping to improve value for money. In addition to new research, including visits to four country offices and desk reviews of a sample of programmes, the review draws together findings from other ICAI reviews. It has been undertaken in parallel with two reviews of DFID’s procurement practice, which is another important driver of value for money. We focus here solely on DFID, which currently spends around three quarters of UK aid. Other ICAI reviews cover the aid management practices of other departments and cross-government funds.

As value for money is both a process and an outcome and cuts across all aspects of DFID’s operations, we have not scored this review. Our judgments about performance are summarised in the final chapter, along with our recommendations for how DFID could do better.

Is DFID’s approach to value for money appropriate to the needs of the UK aid programme?

The broad principles for how UK government departments should ensure value for money are set out in HM Treasury guidelines. They include making sure there is a clear economic case for each spending programme, tracking costs and evaluating results. DFID’s Smart Rules are consistent with these principles.

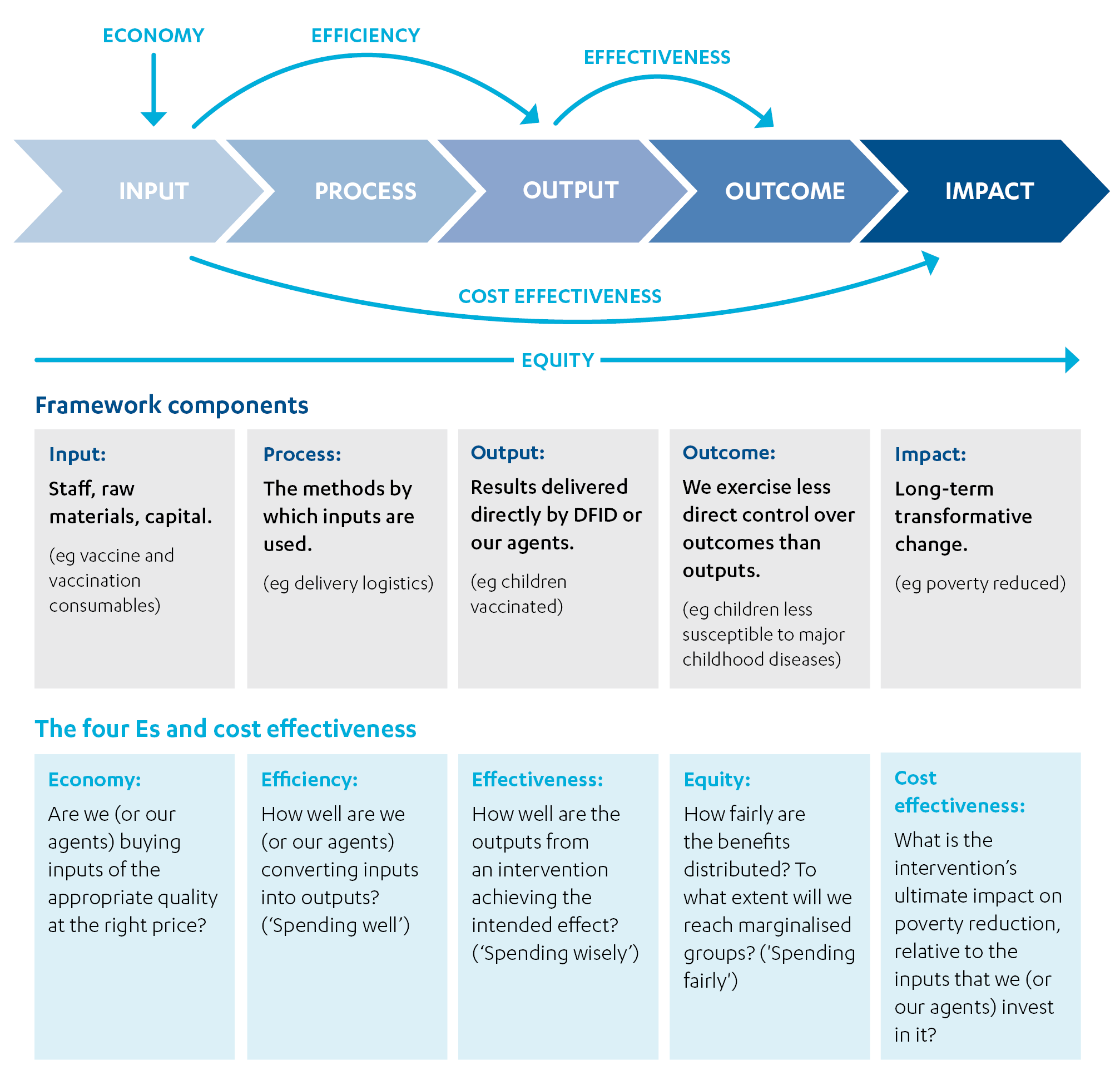

DFID uses a 3E framework – economy, efficiency and effectiveness – to track value for money through its results chain (from inputs to outputs, outcomes and impact). Increasingly, it adds equity as a fourth E, in line with its commitment to ensuring that women and marginalised groups are not left behind.

Internationally, DFID is a strong advocate for the value for money agenda, as part of the UK’s global commitment to strengthening development assistance. It encourages programme partners – whether partner governments, non-governmental organisations or commercial suppliers – to focus on value for money. It considers value for money in its funding of multilateral agencies, and has encouraged them to improve their systems for maximising results.

Overall, we find that the value for money approach has become more holistic over time, with greater emphasis on the quality as well as the quantity of results, despite measurement challenges. By incorporating equity into its value for money assessments, DFID has also acknowledged that reaching marginalised groups may entail additional effort and cost. However, DFID has yet to develop methods for assessing value for money across different target groups, to inform operational decision-making. It could also do more to take account of the sustainability of its results, giving it a longer-term perspective on value for money.

We find that DFID’s value for money approach has a strong focus on controlling costs and holding implementers to account for efficient delivery. For simpler interventions where the results are more predictable (such as vaccination programmes), this may provide sufficient assurance that overall value for money is being achieved. In more complex programmes (such as helping local communities adapt to climate change), the right combinations of interventions in each context may only become apparent over time. In such programmes, achieving value for money also requires experimenting and adapting. At present, DFID’s value for money guidance does not draw this distinction. While DFID has taken steps in recent years to introduce adaptive programming, it is still working through the value for money implications. We have seen few examples in our sample programmes of experimentation with different combinations of outputs to see which proved most cost-effective.

DFID’s results system is not currently oriented towards measuring or reporting on long-term transformative change – that is, the contribution of UK aid to catalysing wider development processes, such as enhancing the ability of its partner countries to finance and lead their own development. DFID’s commitment to promoting structural economic change is an example of a complex objective that is not measured through the current results system. We also find that DFID’s commitments on development effectiveness – such as providing aid in ways that support local capacity, accountability and leadership – are not reflected in its value for money approach. There is a risk that the current approach leads DFID to prioritise the short-term and immediate results of its own programmes over working with and through others to achieve lasting change.

How well are value for money considerations embedded into DFID’s management processes?

Value for money has been thoroughly integrated into DFID’s programme management. Staff show a keen awareness of DFID’s commitment to value for money and their own responsibility for ensuring it. We noted a number of reforms since our 2015 review, DFID’s approach to delivering impact, that have supported this – including the introduction of senior responsible owners empowered to make decisions within a framework of guiding principles, performance expectations and accountability relationships.

Value for money is assessed in the business case for each new programme, using a mixture of quantified cost-benefit analysis and narrative justification. In most of the cases we reviewed, the appraisal was carried out diligently. However, the assumptions behind the original economic case are not routinely checked during the life of the programme. For example, the value for money case for a skills development programme may depend on assumptions about how many people trained will go on to find jobs. If these assumptions prove incorrect, the programme may need to be adjusted.
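As a rough illustration of why such assumptions matter, the sketch below computes a simple benefit-cost ratio for a hypothetical skills programme and shows how it moves when the assumed job placement rate turns out to be lower than appraised. The function name, the formula and every figure are illustrative assumptions, not drawn from any DFID business case.

```python
def benefit_cost_ratio(trainees, placement_rate, benefit_per_job, total_cost):
    """Benefit-cost ratio under a deliberately simple, assumed model:
    benefits = (trainees who find jobs) x (benefit per job)."""
    if total_cost <= 0:
        raise ValueError("total_cost must be positive")
    jobs = trainees * placement_rate
    return jobs * benefit_per_job / total_cost


# Hypothetical figures, for illustration only.
TRAINEES = 10_000
BENEFIT_PER_JOB = 1_500   # assumed income gain per job found, in pounds
TOTAL_COST = 6_000_000    # assumed total programme cost, in pounds

# Placement rate assumed at appraisal versus the rate seen in monitoring.
appraised = benefit_cost_ratio(TRAINEES, 0.60, BENEFIT_PER_JOB, TOTAL_COST)
observed = benefit_cost_ratio(TRAINEES, 0.30, BENEFIT_PER_JOB, TOTAL_COST)

print(f"Ratio at appraisal (60% placement): {appraised:.2f}")
print(f"Ratio in monitoring (30% placement): {observed:.2f}")
print("Benefits still exceed costs:", observed >= 1.0)
```

In this made-up case, halving the placement rate takes the ratio from above 1 to below 1, which is the kind of shift that routine checking of appraisal assumptions would bring to light.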

Programmes now routinely monitor key costs through the life of the programme (such as, for an education programme, the costs of new textbooks or training teachers). This information is assessed in the value for money section of annual reviews. In our sample, these assessments were of mixed quality and in some instances appeared written to justify the continuation of the programme, rather than test whether the value for money case was being achieved.

Annual review scores – which are a key control point in DFID’s programme management – relate to progress at output level, and not to achievement of outcomes or value for money as a whole. According to DFID’s own assessment, this scoring system risks creating incentives to focus on efficiency of delivery more than on progress towards end results. We are also concerned that the system creates pressures for optimistic scoring of programmes. DFID often sets ambitious targets for programmes at design, only to revise them in light of experience. Programme logframes are not meant to be static and adjustments are to be expected. However, the flexibility of targets makes it difficult to assess whether a positive annual review means good programme performance or a lack of ambition in the targets. We could also find no requirement to check that the original economic case for a programme still holds following a downgrading of targets.

DFID’s centrally managed programmes, which undertake large numbers of often small-scale activities in partner countries (for example, there are 135-140 centrally managed programmes active in Uganda alone), are often poorly coordinated with in-country programmes, presenting a potential value for money risk. Some of the more recent centrally managed programmes, however, are better coordinated since they are designed to add value to country portfolios and capacities in challenging areas such as economic development.

It is in the management of country portfolios that we find the most significant gaps in DFID’s value for money approach. In other reviews, we have welcomed improvements in DFID’s diagnostic work (the analytic work that guides DFID’s investment choices) and some promising moves towards better risk management at the country portfolio level. However, there is no system for reporting and capturing results at that level. DFID tracks the performance of its country portfolios through a Portfolio Quality Index, but this is derived from annual review scores and shares their limitations. DFID country programmes pursue a range of cross-cutting objectives, such as promoting structural change, building resilience to climate change, tackling gender inequality and supporting openness and transparency in the fight against corruption. These country-level cross-cutting objectives are important to the achievement of the Sustainable Development Goals, but do not currently feature in DFID’s approach to assessing value for money. DFID’s value for money approach should focus not just on the achievements of each individual programme, but on how they work together to deliver lasting impact.

Have DFID’s efforts led to improvements in value for money?

Across our sample of 24 programmes, programme documents recorded 29 specific instances where programme managers or implementers had found ways to improve value for money. Most of these involved cost savings, for example through better procurement. We find that DFID is diligent at pursuing incremental improvements in value for money at programme level. However, we found few examples of efficiency measures that went beyond cost to consider options for new and better ways of doing things.

DFID has got better at identifying underperforming programmes and taking remedial action – including closing them where necessary and reallocating the resources. There are signs of a positive shift in the corporate culture towards acknowledging and learning from failure. We also saw a good range of evidence that DFID’s advocacy with multilateral partners has encouraged them to strengthen their management systems, leading to improvements in value for money.

DFID is in the process of developing sector-specific guidance on value for money in a welcome step towards a more structured approach to learning. However, our observation is that DFID staff and implementing partners are often required to develop their own solutions to complex value for money challenges, sometimes with an element of reinventing the wheel, rather than being able to draw on a bank of tried and tested methods. While we saw some instances of targeted learning support, a more systematic approach to learning, particularly across sectors and themes, could help to reduce transaction costs, promote consistency (where appropriate) and build an evidence base beyond individual programmes.

Conclusion and recommendations

Overall, we conclude that DFID has come a long way in embedding value for money into its business processes. Its diligence is improving the return on the UK investment in aid, which is most visible through incremental improvements in economy and efficiency at programme level. We also found some gaps and weaknesses, including a tendency to focus on economy and efficiency rather than emerging impact, shortcomings in the annual review process, and a lack of attention to retesting the original value for money proposition in light of implementation experience. Cross-cutting drivers of value for money, such as levels of country partner ownership and potential synergies between programmes, are not clearly identified at the portfolio level, although we welcome the increased focus on ‘leaving no one behind’. A more ambitious value for money agenda would address the larger question of how UK aid is helping to deliver transformative change in its partner countries.

We offer the following recommendations to help DFID strengthen its value for money approach further.

Recommendation 1

DFID country offices should articulate cross-cutting value for money objectives at the country portfolio level, and should report periodically on progress at that level.

Recommendation 2

Drawing on its experience with introducing adaptive programming, DFID should encourage programmes to experiment with different ways of delivering results more cost-effectively, particularly for more complex programming.

Recommendation 3

DFID should ensure that principles of development effectiveness – such as ensuring partner country leadership, building national capacity and empowering beneficiaries – are more explicit in its value for money approach. Programmes should reflect these principles in their value for money frameworks, and where appropriate incorporate qualitative indicators of progress at that level.

Recommendation 4

DFID should be more explicit about the assumptions underlying the economic case in its business cases, and ensure that these are taken into account in programme monitoring. Delivery plans should specify points in the programme cycle when the economic case should be fully reassessed. Senior responsible owners should also determine whether a reassessment is needed following material changes in the programme, results targets or context.

Recommendation 5

Annual review scores should include an assessment of whether programmes are likely to achieve their intended outcomes in a cost-effective way. DFID should consider introducing further quality assurance into the setting and adjustment of logframe targets.

Introduction

Purpose

With the UK’s commitment to spending 0.7% of gross national income on aid, the drive to achieve greater value for money has become a defining feature of UK aid. Since 2010, DFID has made a sustained effort to improve the integration of value for money concerns throughout its operations, both bilateral and multilateral.

“The government will ensure that every penny of existing ODA [official development assistance] and all new ODA spend is and remains good value for money”

UK aid strategy, 2015

“Our bargain with taxpayers is this. In return for contributing your money to help the world’s poorest people, it is our duty to spend every penny of aid effectively. My top priority will be to secure maximum value for money in aid through greater transparency, rigorous independent evaluation and an unremitting focus on results.”

Rt Hon Andrew Mitchell MP, then DFID Secretary of State, 12 May 2010.

Achieving and demonstrating value for money in development aid is a complex undertaking with major implications for the work of the department. In a 2015 report, DFID’s approach to delivering impact, ICAI recognised how DFID’s approach to value for money had helped to tighten the focus on results and increase accountability over the aid budget. It also expressed concern that the value for money tools then available in DFID emphasised the cost of inputs and the delivery of short-term results over sustainable impact and transformative change.

Since our 2015 report, DFID has introduced substantial changes in its approach to value for money. In light of the importance of the topic, we have undertaken a review of DFID’s approach to maximising value for money across its portfolios and programmes and its relationships with multilateral partners. The review draws on new evidence gathered across a wide range of programming at country and centrally managed levels, as well as value for money-related findings from past ICAI reviews. In parallel, we are also conducting two reviews of DFID’s procurement practice – another important driver of value for money.

We have chosen to focus this review on DFID, as the specialist aid department responsible for around 75% of the UK aid budget. However, in recognition that other departments play an increasingly important role in managing UK aid, we are also conducting a series of reviews on major new cross-government aid instruments and programmes. These include published reviews on the Prosperity Fund and the Global Challenges Research Fund, and an upcoming review on the Conflict, Stability and Security Fund. We also expect that lessons from this review will be of value to other aid-spending departments.

In this review, we assess whether DFID has an approach to value for money that supports the objectives and priorities of the UK aid programme and meets DFID’s obligations as a spending department. We assess how far DFID has progressed in embedding value for money into its management processes, including programme and portfolio management, and then turn to the question of whether the focus on value for money is in fact driving improved returns on UK aid investment. Our review questions are set out in Table 1.

Box 1: ICAI and value for money

Providing assurance to Parliament and the public about the value for money of UK aid is a central feature of ICAI’s mandate. This role is acknowledged in the UK aid strategy, which identifies ICAI as one of the mechanisms for assuring value for money across the aid programme. Value for money is a cross-cutting theme in all ICAI reviews and ICAI also undertakes reviews focusing on specific aspects of value for money.

This review covers DFID’s bilateral aid programme (including bilateral programmes contracted to multilateral agencies) across both development aid and humanitarian assistance. It also examines how DFID considers value for money in its choice of multilateral partners and influences the value for money of its multilateral aid. We do not assess DFID’s resource-allocation model or the value for money of its administrative budget.

Table 1: Our review questions

| Review criteria and questions | Sub-questions |
|---|---|
| 1. Relevance: Is DFID’s approach to value for money appropriate to the needs of the UK aid programme? | • Do DFID’s value for money framework and the design of its approach adequately support UK aid policies and priorities and the interests of intended beneficiaries? • How suited is DFID’s framework to capturing the value for money of the full range of development results? • Does DFID have an appropriate approach to promoting value for money in multilateral aid? |
| 2. Effectiveness: How well are value for money considerations embedded in DFID’s management processes? | • How effectively is value for money embedded and applied in the country portfolio and programme management cycle across the aid programme? • Does DFID effectively identify and monitor the different elements of value for money throughout the programme cycle? • Does DFID use value for money assessments to identify stronger and weaker value for money performance across portfolios of similar programmes? • Is DFID’s selection of delivery channels and partners informed by value for money considerations? • How effectively does DFID influence its multilateral partners to improve value for money? |
| 3. Effectiveness: To what extent do DFID’s value for money tools, processes and accountabilities lead to improvements in value for money? | • Does DFID succeed in improving value for money in specific programmes or across portfolios of similar activity? • Does DFID succeed in identifying underperforming programmes and portfolios and then take appropriate action? • Is DFID learning lessons which are informed by value for money processes and assessments? |

We have chosen to conduct a performance review, as our focus is on DFID’s management processes (see Box 2). On this occasion, as with ICAI’s 2015 review DFID’s approach to delivering impact, we have decided not to score DFID’s performance. As all of DFID’s management processes and programming choices influence its ability to achieve value for money, we judge that the topic is too broad for a single performance score. Our objective here is to identify areas where DFID can continue to strengthen its management processes to improve the value for money of UK aid.

Box 2: What is an ICAI performance review?

ICAI performance reviews take a rigorous look at the efficiency and effectiveness of UK aid delivery, with a strong focus on accountability. They also focus on management processes in aid-spending departments and examine whether their systems, capacities and practices are robust enough to deliver effective assistance with good value for money.

Other types of ICAI review include impact reviews, which examine results claims made for UK aid to assess their credibility and their significance for the intended beneficiaries, learning reviews, which explore how knowledge is generated in novel areas and translated into credible programming, and rapid reviews, which are short reviews examining emerging issues or areas of UK aid spending.

Methodology

The main methodological elements of this review were as follows:

- A literature review on value for money in international aid.

- A review of how value for money is incorporated into DFID’s management processes, benchmarked against HM Treasury guidance.

- Interviews with a wide range of key stakeholders, including DFID staff, representatives of other government departments, DFID’s implementing partners and external experts and stakeholders, to collect feedback on DFID’s approach to and implementation of value for money issues.

- Desk reviews of a sample of 24 DFID programmes in our case study countries and centrally managed programmes to explore how value for money processes have been applied (see Annex 1 for details of our sample).

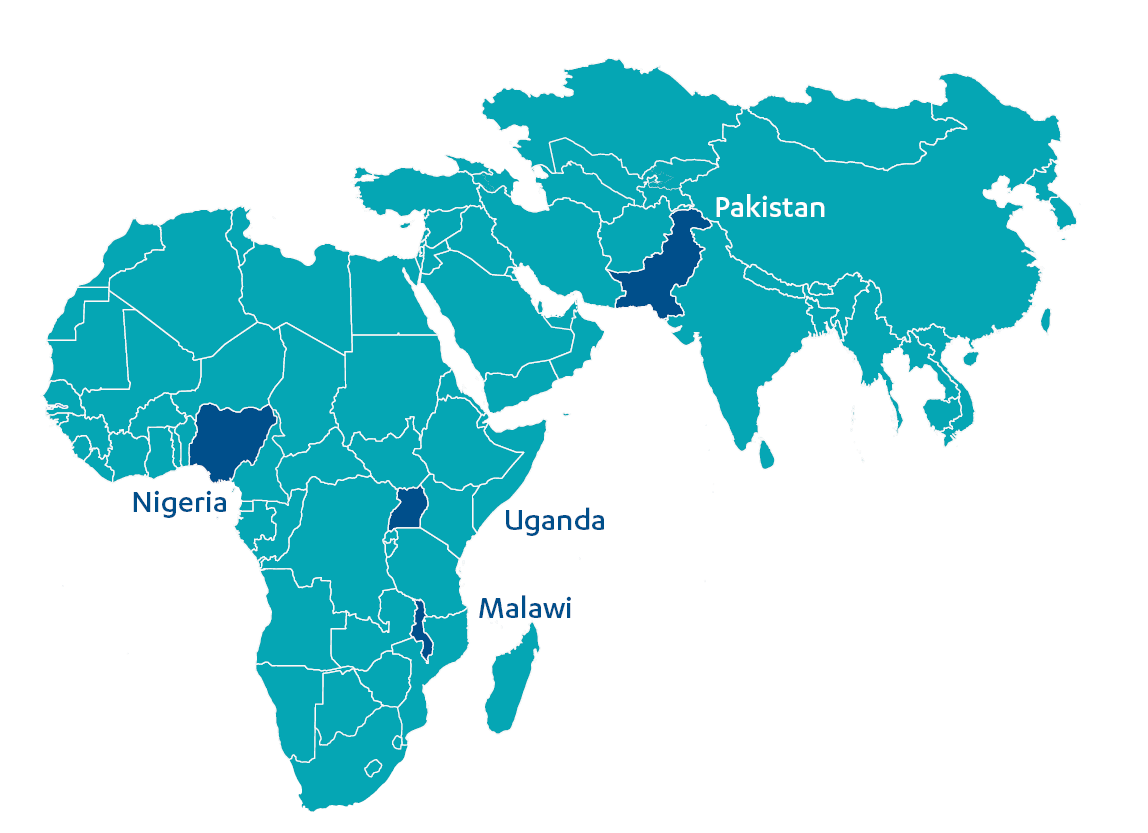

- Case studies of four countries (Malawi, Nigeria, Pakistan and Uganda), including country visits, to assess how value for money has been applied at the portfolio and programme level. We consulted with country teams about their experience with value for money processes and held numerous interviews with country counterparts, delivery partners, development partners and other stakeholders.

The methodology provided a good level of triangulated data to explore the relevance and effectiveness of DFID’s approach to value for money. Both the methodology and this report were independently peer reviewed.

Figure 1: Map of country case study locations

Box 3: Limitations of our methodology

This review explores whether DFID’s approach to value for money is succeeding in increasing the return on UK aid investment. It does not assess the underlying case for spending UK public funds on international development, which is a policy question and outside our remit. Nor does it assess DFID’s choices in allocating aid between countries, or how DFID compares in value for money terms with other UK departments or international donors.

DFID’s value for money practices are continually developing. As far as possible, we have acknowledged recent or forthcoming developments, but these may not yet be reflected in the programmes in our sample.

Background

A commitment to value for money is a key feature of the UK aid programme

All UK government departments have a long-standing obligation to achieve value for money in their use of public funds. The term has its origins in the use of economic appraisal – that is, assessing whether the benefits of publicly funded programmes outweigh the costs – to assess the merits of proposed public spending programmes. ‘Value for money audit’ is also the term used in the UK public sector for performance audits, which have been carried out by the National Audit Office since 1984. There are around 60 such reviews each year, including several of DFID.

For DFID, while the term has been in use for longer, value for money has taken on greater prominence since the government’s commitment to spending 0.7% of gross national income on international aid. It occupies a central place in the 2015 aid strategy (see Box 4 for DFID’s commitments). At its core, the value for money pledge is to ensure that every pound of development assistance maximises its contribution to improving the lives of poor people around the world.

Value for money considerations have been progressively written into DFID’s management processes. The Smart Rules for better programme delivery, adopted in 2014 following a comprehensive end-to-end review of the department’s programme management, marked a shift from a rules-based to a principles-based approach to programme management. Programme managers were given greater discretion to make decisions within an overarching set of principles, performance standards and accountability relationships. The obligation to ensure value for money is reinforced at multiple points through the Smart Rules. In a December 2014 rapid review, ICAI welcomed the shift to a principles-based approach, but called for more attention to the link between value for money in the short term and sustainability over the longer term, and more emphasis on learning and adaptation to drive improvement in impact and value for money.

DFID has also become a strong advocate for the value for money agenda internationally. It encourages its programme partners – whether commercial suppliers, multilateral agencies, non-governmental organisations or partner country governments – to adopt processes for maximising value for money. It has introduced greater consideration of value for money into its allocation of funding for multilateral agencies, and has used its influence to encourage a greater focus by multilaterals on results and value for money.

Box 4: What do DFID’s policies and strategy documents say about value for money?

The 2015 UK aid strategy contains a chapter on value for money. It describes the methods for achieving value for money as cutting waste, introducing greater transparency and subjecting aid to robust independent scrutiny.

DFID’s single departmental plan notes that value for money is delivered at four operational levels:

- the strategic level, by collaborating with international partners to improve the effectiveness of all development finance

- the portfolio level, by allocating resources to maximise impact

- the programme level, through design, procurement, management and evaluation

- the administrative level, by maximising the effectiveness of people and resources.

It includes pledges to cut waste, promote higher transparency standards, give the public a greater say in aid spending, ensure independent evaluation and scrutiny, and encourage effective lesson-learning.

The Bilateral Development Review states an intention to “follow the money, the people and the outcomes” and to ensure more transparency, accountability and focus on outcomes and results. The Multilateral Development Review commits DFID to working more closely with its multilateral partners “to ensure maximum value for money for the UK’s investment”.

Among international donors, value for money is an agenda strongly associated with DFID. There has been a long-standing movement across the development field to strengthen “managing for results”, written into a succession of international agreements. It was DFID that pioneered taking this a step further, to assess whether “the level of results achieved represent good value for money against the costs incurred: moving from ‘results to returns’”. While other development agencies (such as Australia’s) have adopted goals and principles around value for money, DFID has gone the furthest in building value for money considerations into its management processes and encouraging its implementing partners to do the same. This means that there is no established body of international practice against which to measure DFID’s performance.

DFID’s value for money framework

In 2011, DFID adopted a value for money framework that is commonly used across the UK government – including by the National Audit Office in its performance audits. Often called the three Es (see Figure 2), it emphasises three dimensions of value for money:

- economy (minimising the cost of inputs)

- efficiency (achieving the best rate of conversion of inputs into outputs)

- effectiveness (achieving the best possible result for the level of investment).

In its most recent guidance, DFID suggests adding a fourth E – equity, or the extent to which aid programmes reach the poorest and most marginalised, in keeping with DFID’s commitment to ‘leaving no one behind’. The guidance notes:

“High impact does not mean a programme that reaches the largest number of people at the lowest cost. What is important is whether we reach those most in need of support and whether the support is provided in the most economical, efficient and effective way.”

Figure 2: DFID’s value for money framework
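The framework can be read as a set of ratios along the results chain. The minimal sketch below assumes one common reading: economy as the unit cost of inputs, efficiency as outputs per pound spent, effectiveness as the share of outputs that become outcomes, and equity as the share of outcomes reaching the poorest. The class, its field names and all figures are hypothetical, and the formulas are an illustrative assumption rather than DFID’s own definitions.

```python
from dataclasses import dataclass


@dataclass
class ProgrammeYear:
    """Hypothetical results-chain figures for one year of an education programme."""
    spend: float             # total cost of inputs, in pounds
    input_units: float       # e.g. textbooks purchased
    outputs: float           # e.g. pupils supplied with textbooks
    outcomes: float          # e.g. pupils reaching a reading standard
    outcomes_poorest: float  # outcomes achieved among the poorest pupils

    def economy(self) -> float:
        """Unit cost of inputs, in pounds (lower is better)."""
        return self.spend / self.input_units

    def efficiency(self) -> float:
        """Outputs delivered per pound spent (higher is better)."""
        return self.outputs / self.spend

    def effectiveness(self) -> float:
        """Share of outputs that translate into outcomes."""
        return self.outcomes / self.outputs

    def cost_per_outcome(self) -> float:
        """Overall cost-effectiveness: pounds spent per outcome achieved."""
        return self.spend / self.outcomes

    def equity_share(self) -> float:
        """Share of outcomes that reach the poorest group."""
        return self.outcomes_poorest / self.outcomes


# Made-up numbers, for illustration only.
year = ProgrammeYear(
    spend=200_000,
    input_units=50_000,
    outputs=40_000,
    outcomes=10_000,
    outcomes_poorest=2_500,
)

print(f"Economy: {year.economy():.2f} pounds per input unit")
print(f"Efficiency: {year.efficiency():.2f} outputs per pound")
print(f"Effectiveness: {year.effectiveness():.0%} of outputs become outcomes")
print(f"Cost per outcome: {year.cost_per_outcome():.2f} pounds")
print(f"Equity: {year.equity_share():.0%} of outcomes reach the poorest")
```

Keeping the ratios separate shows where a change in overall cost per outcome comes from: cheaper inputs, better conversion of inputs into outputs, or outputs that more often lead to outcomes.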

The challenges of applying value for money to international development

The application of value for money analysis to development assistance is undoubtedly challenging. In the environments in which DFID usually works, official data can be scarce, results chains are complex and uncertain, and sustainable impacts can take a long time to emerge. Aid programmes need to be adapted to different national and local contexts, which makes them difficult to compare. The contexts are also dynamic, and the scope and activities of aid programmes often need to change in response to external developments or lessons learnt.

Given these challenges, the literature warns that a narrow application of value for money analysis to limited data can generate a distorted picture. Commentators have suggested that it can lead programme managers to prioritise cost reduction over maximising impact, to maximise beneficiary numbers rather than reaching the poorest, or to focus on what is readily quantifiable rather than what might deliver the most important results. Others fear that, rather than achieving a tighter focus on results, measuring value for money actually adds to the administrative burden on development practitioners, diverting them from other actions to enhance the effectiveness of aid spending. A recent Overseas Development Institute study concludes that the results agenda (which is linked to value for money) “has oriented DFID’s vision to the short-term and narrow results of projects rather than wider processes of change” and has “shifted the balance towards prioritising accountability to UK taxpayers over poor people abroad”. These challenges call for careful consideration, but are by no means insurmountable.

There is a wide range of value for money tools and approaches in the literature. Some commentators emphasise the need for sophisticated economic analysis, requiring specialist expertise, while others approach value for money as a broad conceptual framework to guide programme design, implementation and evaluation. There is no single correct approach – the appropriate solution depends on the circumstances, including the nature of the intervention and the context in which it is being implemented.

Findings

Is DFID’s approach to value for money appropriate to the needs of the UK aid programme?

In this section, we explore DFID’s approach to value for money in light of the goals and principles of the UK aid programme and international commitments such as the Sustainable Development Goals. We assess whether the approach focuses on the right issues, avoiding the pitfalls described in the literature. By “approach”, we mean both the conceptual framework that DFID uses to analyse value for money and the steps taken to embed value for money thinking across DFID’s portfolio and programme management processes.

DFID’s value for money approach follows UK government guidelines

The broad principles for how UK government departments should ensure value for money are set out in HM Treasury publications, particularly the Green Book and Managing Public Money (see Box 5). The key requirements include ensuring that:

- There is a clear economic case for each spending programme.

- Interventions are evidence-based.

- Risks are managed effectively.

- There are clear lines of responsibility for managing the programme.

- Costs and results are regularly monitored.

- Results are evaluated on completion.

These requirements are stated at a general level, and only address the management of programmes, rather than portfolios (which may not be relevant to other government departments). The specific challenges of achieving value for money through development programming are not addressed in the Treasury guidance.

These principles are restated and elaborated in DFID’s Smart Rules and associated guidance. There are required steps for ensuring value for money through the programme management cycle, from design and procurement through delivery to closure. There are overarching principles about accountability and transparency and on learning and adaptation. Each programme begins with a business case that includes an appraisal of alternative options for achieving the intended objectives, with the costs and benefits clearly identified (in qualitative or narrative form, if they cannot be expressed in financial terms). The business case must set out a value for money case for the programme and how value for money will be monitored over its life. Each programme is subject to annual review, which assesses progress against targets and whether there have been changes to the cost drivers or value for money measures identified in the business case.

Overall, we find DFID’s approach to value for money consistent with UK government requirements.

Box 5: Government guidance on value for money

The Green Book sets out guidance for how to determine whether programmes represent value for money:

- Ensure that there is a clearly identified need and that a proposed intervention is likely to be worth the cost.

- Identify the desired outcomes and objectives of an intervention in order to identify options to deliver them.

- Carry out an options appraisal, reviewing different options including a ‘do minimum’ option to act as a check against more interventionist action.

- Use decision criteria and judgment to select the best option(s). Cost-benefit analysis is recommended.

- Provide an easy audit trail for the decision-maker to check calculations, supporting evidence and assumptions, with sufficient information to support later evaluation exercises. Include the results of sensitivity and scenario analyses, not just single point estimates of expected values.

Managing Public Money requires that all departments, including DFID, have robust and effective systems for internal management of their programming. These include:

- When adopting new programmes:

  - actively managing risks and opportunities

  - appraising alternative courses of action (following The Green Book – see above)

  - basing choices on evidence, models or pilot studies where appropriate

  - including mechanisms for regular stock-take at critical points of projects.

- During the lifespan of programmes:

  - delegating to a senior responsible owner assigned to each significant initiative

  - providing prompt, regular and meaningful management information on costs (including unit costs), efficiency, quality and performance against targets to track progress and value for money

  - using proportionate (not too onerous or complex) administration and enforcement mechanisms

  - using feedback from internal and external audit to improve performance

  - monitoring risks regularly and adjusting programmes when necessary.

- At the end of the programme:

  - evaluating outputs and outcomes, and adjusting activities based on the evaluation

  - identifying and disseminating lessons learnt.

DFID’s value for money approach is beginning to include a focus on quality, but is not yet capturing sustainability

DFID’s value for money approach has always emphasised the need to take account of all forms of value, whether or not they can be measured. However, in our 2015 review, DFID’s approach to delivering impact, we noted the risk that the value for money agenda was leading to a focus on results that could be counted, rather than on meaningful impact for beneficiaries. We were particularly concerned that global results were measured mainly by counting the numbers of people reached, which gave an idea of the scale of the investment but offered a limited and in some ways distorted picture of impact.

We find that this situation is improving, with more of a focus on the quality of results, as well as the quantity. DFID reduced the number of quantitative targets in its 2015-2020 single departmental plan, in order to reduce the reporting burden on country offices. The remaining examples included immunising 76 million children and providing 60 million people with access to clean water and sanitation. At the time of our review, country offices reported against their contribution to these global targets, using standardised methodologies. Those targets are not included in the revised single departmental plan issued in December 2017. At the programme level, DFID monitors unit costs (that is, cost per beneficiary) and tries to ensure that it provides as many immunisations or water systems as it can for the level of investment.

Moving from the quantity to the quality of results can be technically challenging, but DFID is making progress in this direction. For example, in education, DFID now tracks the number of children it supports to gain “a decent education”. This shifts the focus from school enrolment rates to ensuring that children receive an adequate education and achieve basic literacy and numeracy. This is harder to measure in a reliable way. DFID country offices therefore report both on the number of children they fund through school and on measures taken to improve learning outcomes. For example, in one component of its education programme in Pakistan, DFID is working to raise the quality of low-cost private schools. Participating schools are visited regularly by monitors and rated once a year for the quality of their teaching and facilities. We have seen examples in other reviews of programmes measuring the quality of outputs, including in humanitarian aid. Looking beyond quantity to quality is fundamental to achieving value for money, and we welcome DFID’s efforts to take on the associated measurement challenges.

Measuring the sustainability of results remains a challenge. DFID’s Smart Rules state that sustainability must be addressed in the design of every programme. However, DFID’s approach to assessing value for money is constrained by the length of its programme cycle. Within three- to five-year programmes, it is usually impossible to measure whether the benefits of UK investments endure over time. In our review of DFID’s water and sanitation programming, we found that, unlike some other donors, DFID did not monitor whether results were sustained beyond the end of the programme. DFID has since encouraged its implementers to increase their focus on sustainability, but did not take up our recommendation of extending monitoring beyond the programme cycle. In our review of DFID’s use of cash transfers, we also encouraged a stronger focus on the financial sustainability of national cash transfer systems.

Equity is becoming more prominent in DFID’s value for money approach

DFID has made a strong commitment to ‘leaving no one behind’ in its programming, in keeping with a core principle of the Sustainable Development Goals. Its programmes should “prioritise the interests of the world’s most vulnerable and disadvantaged people; the poorest of the poor and those people who are most excluded and at risk of violence and discrimination”. This involves challenging social barriers and overcoming discrimination and exclusion based on gender, age, location, caste, religion, disability or sexual identity. The renewed focus on marginalisation and ‘last mile’ delivery has been an important change in the orientation of UK aid in response to the Sustainable Development Goals. ICAI is currently conducting a review of DFID’s support for people with disabilities.

The ‘leaving no one behind’ commitment has important implications for the way DFID assesses value for money. Reaching remote areas, hard-to-reach groups (such as semi-nomadic herders in Uganda) or the marginalised within society (such as people with disabilities) often involves higher costs (see Box 6). For a given budget, there are trade-offs between reaching such groups and maximising the overall number of beneficiaries.

Equity was mentioned in DFID’s original value for money framework in 2011 as a factor to consider, and is now formally incorporated into its value for money framework as the fourth E. Across our sample, we found that a substantial number of programmes now contain objectives around reaching marginalised groups. The additional costs of doing so are discussed in their value for money assessments and justified, based on need. However, DFID has yet to develop methods for comparing value for money across different target groups. New guidance on equity in value for money suggests one potentially useful approach to doing this, based on weighting different classes of beneficiary.

“One way to account for the differing value that people gain from an intervention involves carrying out analysis using distributional weights to adjust explicitly for distributional impacts in the cost-benefit analysis. Benefits accruing to households in a lower quintile [i.e. income bracket] would be weighted more heavily than those that accrue to households in higher quintiles.”

We did not come across any examples of this kind of scoring system being used, but it has the potential to support more rigorous assessment of the equity dimension of value for money. As discussed below, the importance given to targeting different groups should be decided at the country portfolio level, based on evidence and analysis. This is not currently being done.
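The distributional weighting described in the guidance can be illustrated with a minimal sketch. It is not drawn from any DFID programme: the quintile weights, costs and benefit figures are all hypothetical, and the function name `weighted_benefits` is introduced here purely for illustration. The sketch compares two options with identical costs and identical unweighted benefits that differ only in which income quintiles receive those benefits.

```python
# Hypothetical illustration: every figure below is invented, not DFID data.

def weighted_benefits(benefits_by_quintile, weights_by_quintile):
    """Sum benefits across income quintiles, applying a weight to each quintile."""
    return sum(
        benefits_by_quintile[q] * weights_by_quintile[q]
        for q in benefits_by_quintile
    )

# Quintile 1 is the poorest; weights fall as income rises (assumed values).
weights = {1: 2.0, 2: 1.5, 3: 1.0, 4: 0.75, 5: 0.5}

# Two options with the same cost and the same unweighted benefits (100 units),
# distributed differently across quintiles.
cost = 50.0
option_broad = {1: 10.0, 2: 15.0, 3: 25.0, 4: 25.0, 5: 25.0}
option_targeted = {1: 40.0, 2: 30.0, 3: 20.0, 4: 10.0, 5: 0.0}

for name, benefits in [("broad", option_broad), ("targeted", option_targeted)]:
    unweighted_ratio = sum(benefits.values()) / cost
    weighted_ratio = weighted_benefits(benefits, weights) / cost
    print(f"{name}: unweighted ratio {unweighted_ratio:.2f}, "
          f"weighted ratio {weighted_ratio:.2f}")
```

Without weights, the two options look identical. With weights, the option that concentrates benefits in the poorest quintiles scores higher. How much higher depends entirely on the weights chosen, which is why the choice of weights would itself need to rest on evidence and analysis.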

Box 6: Bringing equity into value for money assessment

A DFID family planning programme in Uganda (Accelerating the Rise in Contraceptive Prevalence in Uganda; £30.8 million, 2011-2017) has a focus on hard-to-reach groups. The design notes that providing family planning services in remote areas of Uganda, with small and dispersed populations, costs £11 per woman reached, as compared to £7 in urban areas. These additional costs were judged acceptable because the poorest women in remote areas are likely to give birth to more children than wealthier women, and because reaching the poorest was considered a “critical success factor” for the programme. While this demonstrates a commitment to equity, more could be done to integrate value for money considerations into the design. For example, drawing on the idea in DFID’s latest guidance, different groups of women could be assigned a score (based on fertility data or other social factors), giving a basis for comparing the results of different programming options.
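One way to make the scoring idea concrete is to ask how much extra weight would have to be placed on reaching a woman in a remote area for the higher unit cost to be justified on cost-effectiveness grounds alone. The sketch below uses the two unit costs quoted in this box (£11 and £7). The equity weights are hypothetical, as are the function names, and the comparison deliberately ignores every other factor a real appraisal would consider.

```python
# Unit costs come from Box 6; the equity weights are hypothetical.
COST_REMOTE = 11.0  # £ per woman reached in remote areas
COST_URBAN = 7.0    # £ per woman reached in urban areas

def cost_per_weighted_woman(unit_cost, equity_weight):
    """Cost of one equity-weighted unit of reach."""
    return unit_cost / equity_weight

def break_even_weight(cost_target, cost_reference):
    """Weight at which the costlier group matches the reference group's cost."""
    return cost_target / cost_reference

threshold = break_even_weight(COST_REMOTE, COST_URBAN)
print(f"Break-even weight for remote women: {threshold:.2f}")

urban = cost_per_weighted_woman(COST_URBAN, 1.0)  # urban women as the reference
for weight in (1.0, 1.5, 2.0):  # assumed weights on remote women
    remote = cost_per_weighted_woman(COST_REMOTE, weight)
    better = "remote" if remote < urban else "urban"
    print(f"weight {weight}: remote £{remote:.2f} vs urban £{urban:.2f} -> {better}")
```

Under these assumptions, remote delivery compares favourably only once the weight on remote women exceeds 11/7, or roughly 1.6. Whether such a weight is warranted is a judgment that fertility data or other social factors would need to support.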

The value for money approach is better suited to simpler interventions

For DFID, monitoring programme results and value for money serves two purposes: ensuring that DFID and its implementers are accountable for their use of public money, and building evidence and experience on what works. Both are equally important in ensuring value for money, but in practical terms we find that the main emphasis in DFID is on the former. This makes DFID’s approach better suited to interventions where the evidence on what works is clear than to more complex interventions that require an element of trial and error.

The literature notes that different approaches to value for money may be required for simple and complex interventions. For example, the impact of vaccines on child health is clearly evidenced. A tight focus on procuring vaccines at a good price and distributing them efficiently may provide sufficient assurance that a vaccination programme is achieving value for money. In this case, ensuring that the implementers deliver the outputs efficiently is key to achieving value for money. On the other hand, a programme that seeks to help communities adapt to climate change is more complex. For such a programme, a level of experimentation may be required to determine what combination of inputs and outputs produces the best results for the investment. Monitoring the efficiency of delivering outputs, while still important, tells us little about the potential effectiveness of the programme and therefore its overall value for money.

DFID’s guidance on value for money does not distinguish between the value for money approaches and techniques needed for simple and complex programmes (or elements of them), even though the latter constitute a large proportion of DFID’s work. In practice, we find that the balance of effort is on controlling costs and ensuring efficient delivery, rather than learning what works. This perception is shared by a wide range of internal and external stakeholders.

There may be several reasons for this. Economy and efficiency are relatively easy to measure, and the data becomes available at an earlier point in the programme cycle. They are also within the direct control of the implementer, enabling DFID to hold its implementing partners to account for their performance. For complex programmes, however, there is a risk that this emphasis incentivises a focus on the successful delivery of outputs, rather than on experimentation and adaptation.

In recent years, DFID has taken steps to introduce adaptive management into its programming – that is, in-programme experimentation with different approaches to see which are more effective. We have seen some good examples of adaptive programmes across our reviews, although they remain a minority. There is still a way to go, however, in incorporating adaptive principles into DFID’s value for money approach. We have seen few examples, for instance, of programmes that experiment with different combinations of activities and outputs in order to assess which are more cost-effective.

The approach gives limited emphasis to transformative results

As things currently stand, the results system is not oriented towards measuring or reporting on transformative impact – that is, the contribution of UK aid to catalysing wider development processes, including the ability of country partners to finance and deliver development interventions themselves. Transformative impacts are rarely the product of a single aid programme. They involve working with others, building capacity in partner countries and influencing other sources of development finance. DFID has set itself the ambition of achieving transformative impact in various areas, for example:

- Its Economic Development Strategy sets out the objective of mass employment creation through structural economic change. In this area, the results that matter for value for money assessment are not just the jobs created directly by DFID programmes, but whether DFID has helped bring about the conditions for economic transformation and job creation to occur.

- DFID is also committed to building resilience in its partner countries to natural disasters and the impacts of climate change. Resilience is a complex outcome of changes in many areas, such as how communities are organised, their agricultural practices and the diversity of their economies.

These types of results are hard to measure and to link robustly to DFID’s work. They are, however, fundamental to the achievement of the Sustainable Development Goals. At present, there is limited reporting of results at the level of country portfolios, which is where we would expect transformative impacts to arise. In practice, therefore, DFID’s value for money approach emphasises the direct and immediate results of individual programmes, rather than results achieved by influencing partner countries or through the cumulative or complementary effect of several interventions.

DFID’s value for money approach does not properly reflect its commitments on development effectiveness

DFID has in recent years phased out budget support and scaled back direct financial aid to partner governments. This has been driven in part by concerns about fraud and corruption. This creates a tension between two different drivers of value: the desire to control UK aid funds and prevent leakage, and the importance of aligning with national priorities and building national capacities.

In the past, these objectives were well integrated into DFID’s programming through its strong commitment to aid effectiveness principles. Though not expressed in value for money terms, the 2005 Paris Declaration on Aid Effectiveness – of which the UK was a sponsor and signatory – recognised that the practice of delivering aid through projects that bypassed national governments, while perhaps maximising the immediate return on the investment, did not help to build capacity or national leadership of the development process. Indeed, it recognised that too many freestanding donor projects could fragment and erode national capacity. The Paris Declaration included a commitment to greater use of “programme-based approaches”, where groups of donors pool their funding in support of a single, government-led and managed programme.

While DFID’s funding modalities have changed, principles of “development effectiveness” are still written into the Smart Rules. They include promoting country ownership of development processes, aligning with national development strategies, harmonising with the activities of other donors and providing aid in such a way that it supports and strengthens local responsibility, capacity, accountability and leadership.

We would expect to see these principles reflected more strongly in DFID’s programme designs and its value for money approach. While a few of the business cases we reviewed discussed the merits of working through country systems in their options appraisal, contributions to building sustainable national capacity – and therefore working towards eventual graduation from aid – are not usually factored into value for money assessments. We heard concerns from partner country officials and other external stakeholders that DFID’s understanding of value for money can result in prioritising short-term development results over working through country systems and building national capacities for the longer term. To balance this risk, its value for money approach should reflect more clearly and specifically its commitment to development effectiveness.

DFID has been a strong advocate of value for money among its multilateral partners

Aid through multilateral agencies represents around 60% of DFID’s budget (40% as core funding for multilateral agencies and 20% as bilateral projects implemented by multilateral partners). It is therefore a major determinant of overall value for money. Unlike for bilateral aid, DFID has only indirect influence over how it is spent, calling for a different approach to achieving value for money than for most of its bilateral spending. Its main entry point for influence is through periodic funding cycles, when decisions on DFID’s level of funding can take account of past performance and future plans on financial management and reporting. In recent years, it has used that influence to encourage its multilateral partners to adopt a tighter results focus and build value for money into their management processes.

Its assessments of multilateral capacity are set out in the 2011 Multilateral Aid Review and the 2016 Multilateral Development Review. Funding is assigned to individual agencies based on their strategic fit with the objectives of the UK aid programme and their organisational effectiveness. Each partner was assessed against value for money criteria, using a ‘balanced scorecard’ approach. The assessment process and the publication of the results create substantial pressure on the agencies to lift their performance in a range of management domains.

In our 2015 report How DFID works with multilateral agencies, we expressed concern that this tight focus on internal management, while addressing one important dimension of value for money, was displacing dialogue on wider strategic issues around the performance of multilateral agencies and the multilateral system as a whole. We found that, at that time, DFID had no overall strategy for engaging with the multilateral system and lacked clarity about what it expected from multilateral agencies in particular countries. Furthermore, we found that DFID’s assessments of multilateral capacity at the organisational level did not provide sufficient assurance about their performance in particular countries, and that its influencing efforts at the global level were not joined up with its engagements in-country.

DFID has made some progress on addressing these issues since then, although it remains a work in progress. It has undertaken reviews of how the multilateral system performs in two sectors (health and humanitarian aid), and is moving towards joint funding arrangements for groups of related agencies. Given that attempts to rationalise the complex multilateral system have made little headway, this is a pragmatic approach to improving value for money. We also welcome DFID’s efforts to encourage multilateral agencies to be more transparent about their expenditure (such as on travel), and to link its influencing work at the global level to its work with multilateral agencies in particular countries.

DFID has concluded performance agreements with most of the 36 multilateral agencies that receive over £1 million in core funding. In our review of the UK aid response to global health threats, we noted that such an agreement with the World Health Organization was helping to drive reforms to address deficiencies revealed during the response to the West Africa Ebola epidemic. However, not all of DFID’s multilateral partners have agreed with the approach, nor is the UK at this stage supported by other funders. DFID points out that its performance-based funding is linked to results data that is already reported by its multilateral partners, and so does not involve an additional burden. However, we note that there may be additional costs involved (such as independent verification of results) and risks of unintended consequences (such as encouraging multilateral partners to prioritise key performance indicators over other results areas – a risk inherent in any key performance indicator). DFID will therefore need to review in due course whether performance-based funding for multilaterals does on balance improve value for money.

Conclusions on DFID’s value for money approach

DFID’s approach to value for money is consistent with UK government guidance. The 3E or 4E framework provides a useful set of principles and parameters for thinking through value for money issues in a way that is, at least on paper, flexible and capable of incorporating different aspects of value. We found positive signs of an increased focus on the quality of outputs, as well as the quantity. We also saw substantial progress in incorporating equity and the ‘leave no one behind’ commitment into programme design and delivery.

DFID’s approach is better suited to interventions where the evidence on what works is clear and the delivery options well known. It is less suited to complex interventions where a level of experimentation is required. While DFID has taken steps in recent years to introduce adaptive management into its programming, this is not yet part of its value for money approach. DFID’s approach is not well suited to capturing transformative impacts that arise from the interaction of several programmes or by working with others. Nor does DFID’s approach reflect its commitments to development effectiveness principles.

DFID has been a strong advocate for value for money among its multilateral partners, and we welcome recent efforts to develop a more holistic approach to improving value for money across the multilateral system.

How well are value for money considerations embedded into DFID’s management processes?

In this section, we assess to what extent value for money considerations are built into the department’s management processes at portfolio and programme levels, and whether they in fact guide management decisions.

DFID has made a sustained effort to integrate value for money into its management processes

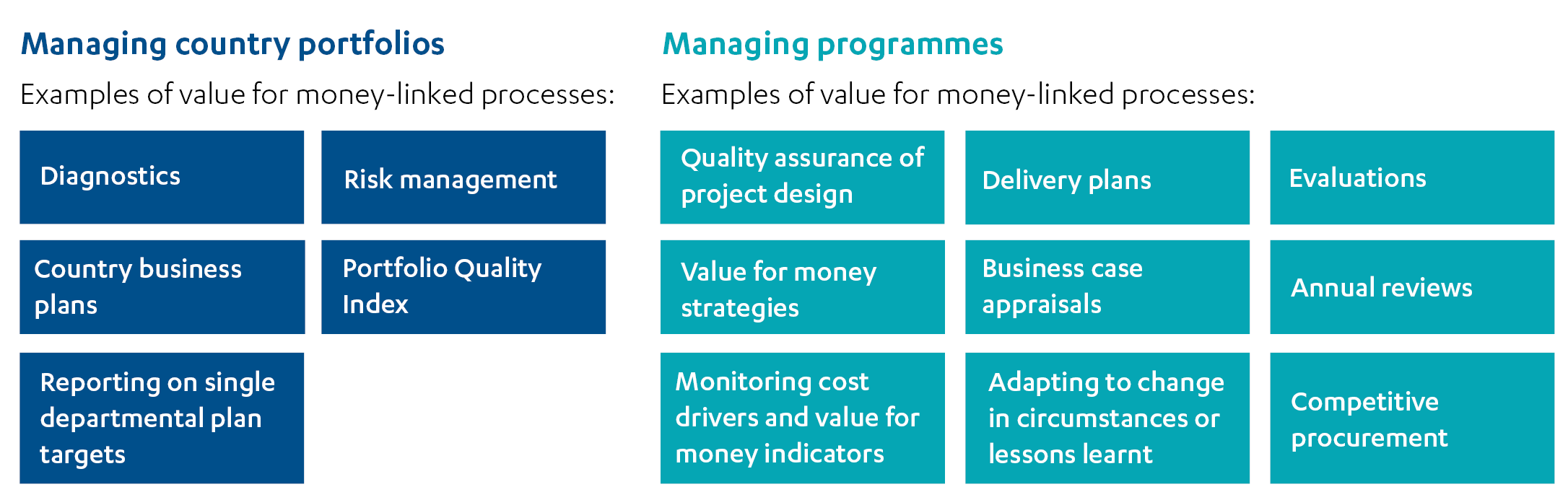

Value for money plays a prominent role in DFID’s processes. It features in the processes for allocating aid between countries, in DFID’s work with multilateral partners, and at key points through the programme management cycle for bilateral programmes (see Figure 3).

Figure 3: DFID programme and portfolio management processes addressing value for money

Box 7 summarises how value for money is addressed in DFID’s Smart Rules and associated guidance. The latest iteration of the Smart Rules added an overarching requirement that programme managers must document their efforts to improve value for money, to strengthen accountability.

Box 7: Value for money in DFID’s management processes

DFID’s Smart Rules on programme management, its Smart Guide on value for money and its guidelines on business cases and annual reviews set out a series of guiding principles, mandatory requirements and recommendations for how to address value for money through the programme management cycle. The key requirements are as follows:

- Business cases must:

  - Show that the expected benefits (in monetary terms if possible, otherwise in narrative form) are greater than the costs, and better than doing nothing at all.

  - Include a theory of change describing how the programme will deliver its expected results and what assumptions have been made. These assumptions may help to identify risks to be managed over the life of the programme.

  - Specify how value for money will be monitored over the life of the programme and identify value for money indicators.

- Each programme must have a delivery plan, which describes how risks and value for money will be monitored.

- Annual reviews must include a section on value for money. They should:

  - Consider whether there have been any significant changes that would affect the value for money assumptions set out in the business case.

  - Assess whether there have been changes in costs or cost drivers, and why.

  - Assess value for money performance by reference to the original value for money proposition set out in the business case.

  - Reach an overall judgment as to whether the programme continues to represent value for money, and if not, identify remedial actions.

- Where a programme significantly underperforms in the annual reviews, remedial measures must be included in the delivery plan.

- There is an overarching obligation on programme managers to assess and record how their programming choices maximise impact per pound of expenditure for DFID.

- Measures to ensure sustainability of outcomes beyond the life of the programme must be considered in the business case and built into the design and delivery of the programme.

Through our interviews in DFID country offices, it was clear that the responsibility for achieving value for money was shared across the country team, rather than lying with economists or results advisors. We found that staff at all levels showed a keen awareness of DFID’s commitment to value for money and their own responsibilities for ensuring it.

Since our 2015 review, DFID’s programme management processes have continued to develop, under the guidance of DFID’s Programme Cycle Management Committee and Better Delivery Department. Reforms have included nominating senior responsible owners for each programme, to bring decision-making closer to the point of programme delivery. They are allowed greater autonomy to take decisions to maximise value for money, within a framework of guiding principles, performance expectations and accountability relationships. There have been reforms to simplify and streamline programme management processes, introduce more flexibility into programme delivery and improve real-time management information. Overall, these reforms have helped to make value for money an integral part of DFID’s programme management.

Business cases set out the value for money case, but the assumptions are not checked over the life of the programme

DFID’s business cases set out the value for money case for the investment. There are many techniques available for doing this, and the rules are flexible as to what method should be used. Across our sample, we found that business cases were mostly in line with the requirements and value for money appraisals were carried out diligently. Of the 24 programmes in our sample, 20 included a quantified cost-benefit analysis, while the others set out their value for money case in narrative form.

The Smart Rules state that the value for money performance of each programme should be reviewed periodically, by reference to the original value for money proposition set out in the business case. This is an important principle. DFID does not use benchmarks to assess value for money (that is, how does the programme compare to other, similar interventions) owing to the wide variations in the contexts where it works and the difficulty of collecting comparable data. Instead, it monitors changes in value for money indicators over the life of each programme. The lack of external comparison makes it particularly important to check that the assumptions and calculations in the business case have proved valid in light of experience. In the example in Box 8, the value for money case for a skills development programme depended on assumptions about how many young people who were trained would go on to find jobs. If this number falls below what is expected, the value for money case may no longer hold true and the programme would need to be adjusted.

This checking is not routinely done. In some programmes in our sample, the calculations and underlying assumptions were not set out in sufficient detail in the business case for them to be checked, while in others, such checking would require data that was not being collected. Annual reviews include a discussion of value for money (see below). However, in no case in our sample did this include explicitly checking the assumptions in the original economic case. DFID could therefore tighten up the links between economic appraisals and its value for money monitoring.

Box 8: Options appraisals in DFID business cases

All DFID business cases must include a comparison of different options for achieving the intended results. Some programmes lend themselves to formal cost-benefit assessments; others can only be assessed in qualitative terms. Here is an example of each.

The Pakistan Skills Development Programme (£6.7 million, 2015-2021) aims to increase access to jobs and income-earning opportunities for poor and vulnerable people. DFID conducted a cost-benefit analysis for three options at the business case stage. The quantifiable benefits were the number of additional people trained, and increases in their employment prospects and earnings as a result of the training. The option that DFID chose had a ratio of quantifiable benefits to costs of 1.57, which was lower than one of the other options, but DFID judged that it offered other, non-quantifiable benefits that made it better value for money, including the opportunity for DFID to contribute to improvements in skills training at a national level. This sort of economic analysis is based on various assumptions. In this case DFID assumed that at least 35% of those trained would go on to find employment (a predecessor programme had achieved an employment rate of 67%). DFID conducted a sensitivity analysis which showed that the ratio of quantifiable benefits to costs could vary substantially in response to changes in this assumption. The latest annual review of the programme recommended re-running the cost-benefit analysis when adequate data on these employment rates becomes available. This is good practice, but one that we have not often seen in our reviews.

The Institutional Support to the Electoral Process in Malawi programme (£7.4 million, 2013-2017) aimed to strengthen Malawi’s ability to deliver elections that are free and credible, with electoral outcomes informed by public debate. DFID did not perform a quantitative cost-benefit analysis at the business case stage. DFID argued that monetising the benefits of elections is difficult as there are no sensible measures of the value of additional votes or electoral registrations. Instead, the business case set out the three options and discussed their costs, benefits and potential weaknesses in narrative form.
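As an illustration of the kind of sensitivity check described in the Pakistan example in Box 8, the sketch below shows how a ratio of quantifiable benefits to costs might respond to changes in the assumed employment rate. It is not DFID's model. The two base figures (a ratio of 1.57 at an assumed employment rate of 35%) come from the text above; the assumption that quantifiable benefits scale in direct proportion to the employment rate is ours, made purely for illustration, and the function names are hypothetical.

```python
# Illustrative sensitivity check on a benefit-cost ratio.
# ASSUMPTION (not from the business case): quantifiable benefits scale
# linearly with the share of trainees who find employment, while costs
# stay fixed. Real appraisals will use a richer model.

BASE_RATE = 0.35  # employment rate assumed in the business case (from the text)
BASE_BCR = 1.57   # ratio of quantifiable benefits to costs (from the text)


def bcr_at(employment_rate: float) -> float:
    """Benefit-cost ratio at a given employment rate, under linear scaling."""
    if not 0.0 <= employment_rate <= 1.0:
        raise ValueError("employment_rate must be between 0 and 1")
    return BASE_BCR * employment_rate / BASE_RATE


def break_even_rate() -> float:
    """Employment rate at which benefits equal costs, under linear scaling."""
    return BASE_RATE / BASE_BCR


if __name__ == "__main__":
    # 0.67 is the rate achieved by the predecessor programme (from the text).
    for rate in (0.20, 0.35, 0.50, 0.67):
        print(f"employment rate {rate:.0%}: ratio {bcr_at(rate):.2f}")
    print(f"break-even employment rate: {break_even_rate():.1%}")
```

Re-running a check of this kind once actual employment data is available, as the annual review recommended, would show whether the original economic case still holds.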

Monitoring of value for money through programme delivery is of mixed quality

Most DFID programmes (18 of the 24 in our sample, including all the more recent ones) now have a value for money framework or strategy, or are in the process of developing one. These identify key costs and value for money measures to be monitored during implementation. For example, in education programmes, the key costs may include teacher salaries, teacher training costs, the costs of textbooks and the costs of constructing a classroom, while value for money measures include the average costs of supporting a child in school for a year. These are monitored so that unexpected variations (over time or between locations) can be identified and investigated. Sometimes trigger points are specified in the value for money strategy – that is, action must be taken if a particular cost exceeds a certain level.
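The monitoring logic described above can be sketched in a few lines. This is a minimal illustration, not a DFID tool: the district names, cost figures, trigger point and tolerance are all hypothetical, and using the median as the reference point for "unexpected variation" is our own simplifying assumption.

```python
# Illustrative unit-cost monitoring with a trigger point.
# All data, thresholds and names below are HYPOTHETICAL.
from dataclasses import dataclass
from statistics import median


@dataclass(frozen=True)
class Observation:
    location: str
    year: int
    total_cost: float  # spend in pounds
    units: int         # e.g. children supported in school for a year

    def __post_init__(self) -> None:
        if self.units <= 0:
            raise ValueError("units must be positive")

    @property
    def unit_cost(self) -> float:
        return self.total_cost / self.units


def flag_for_investigation(observations, trigger_point, tolerance=0.25):
    """Return (observation, reason) pairs that warrant follow-up.

    An observation is flagged if its unit cost exceeds the trigger point,
    or if it differs from the median unit cost by more than `tolerance`
    (expressed as a fraction of the median).
    """
    mid = median(o.unit_cost for o in observations)
    flagged = []
    for o in observations:
        if o.unit_cost > trigger_point:
            flagged.append((o, "exceeds trigger point"))
        elif abs(o.unit_cost - mid) > tolerance * mid:
            flagged.append((o, "unusual variation from median"))
    return flagged


SAMPLE = [
    Observation("District A", 2016, 120_000, 1_000),
    Observation("District B", 2016, 110_000, 1_000),
    Observation("District C", 2016, 180_000, 1_000),
    Observation("District D", 2016, 70_000, 1_000),
]

if __name__ == "__main__":
    for obs, reason in flag_for_investigation(SAMPLE, trigger_point=150.0):
        print(f"{obs.location} ({obs.year}): £{obs.unit_cost:.0f} per unit, {reason}")
```

Note that an unusually low unit cost is flagged as well as a high one, since it may point to under-delivery or data problems rather than better value for money.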

Encouragingly, in most of the programmes in our sample (15 of 24), we found that the approach to monitoring value for money had become more specific and detailed over the life of the programme, either by identifying additional indicators or by tracking existing indicators more systematically. We also noted a range of qualitative assessments and practical examples in annual reviews, to complement the data.

Value for money is assessed as a component of DFID annual reviews, which are mandatory for every programme. Reviewers examine the data on cost drivers, whether costs are being adequately managed by programme partners and the quality of financial management. They assess progress towards both output and outcome targets. They compare value for money performance to the original value for money proposition set out in the business case.

We found the quality of these assessments to be variable. Box 9 gives an example of a good quality assessment. However, in eight of our 24 programmes, we found that value for money indicators identified in business cases were not being monitored as planned. Lack of data can result in superficial analyses, and at times assessments appear to have been written in order to make a case for proceeding, rather than to test whether value for money is really being achieved. A recent internal assessment by DFID suggested that annual review assessments are more focused on outputs than outcomes and do not pay sufficient attention to assessing the theory of change in light of experience with implementation – partly because the outputs are linked to programme scores (see the next section). It recommended changing the guidance to encourage reviewers to produce stronger data and better narratives in support of their assessments of value for money, theories of change, progress towards outcomes and equity issues.

Box 9: Good practice in value for money assessment

A good example of value for money assessment is provided by the AAWAZ Voice and Accountability Programme in Pakistan (£35.4 million, 2012-2017). The programme supports local communities and their representatives to promote more responsive local governance, with a focus on women and minority rights. The value for money section of the 2016 annual review provided a detailed analysis of programme expenditure and cost drivers (particularly salaries). It verified the existence of robust processes for procurement and operational and financial management. It described the work of the programme team to develop indicators to show the return on investment, going beyond what had been proposed in the business case. It presented the findings of a unit cost analysis, suggesting that, for every £10 spent by the project, six citizens were safer, seven had better access to public services, five expressed increased satisfaction with public services and at least one had been given an opportunity to hold decision-makers to account. A sensitivity analysis suggested that delayed implementation is likely to be the biggest risk to achieving value for money. The programme was judged to be continuing to deliver significant value for money by reference to a range of evidence, including its direct engagement with 176,955 citizens (of which half were women) across 45 districts through its community forums at a cost of £15 per citizen per year. However, the analysis noted that it would be helpful to identify similar programmes to use as comparators.

Annual review scores show achievement of output targets, rather than value for money

All DFID programmes must undergo an annual review. In each annual review, programmes are scored based on progress towards their targets. Scores range from C (“outputs substantially did not meet expectation”) to A++ (“outputs substantially exceeded expectation”). The annual review score is a key control point in DFID’s programme management. Programmes that score poorly are subject to mandatory remedial measures and may be terminated.

Annual review scores are based on outputs (what was done), rather than emerging evidence of outcomes (what was achieved as a result) or value for money (results relative to expenditure), although there is narrative analysis of all three areas. By focusing on outputs, the score provides an indication of whether implementation is proceeding as planned. However, a scoring system based on outputs can give an incomplete or even misleading picture of programme performance for more complex or less well tested programmes, or those in changeable environments. It risks focusing attention on the efficiency of delivery, rather than on whether the programme is likely to achieve the intended results.

We are also concerned that pressures on staff to proceed with implementation may work against a frank review of programme performance during annual reviews. This tension was recognised in a recent internal review of DFID’s annual review process.

Logframe targets are frequently revised, sometimes as a result of recommendations from annual reviews. In our sample, logframes had frequently been revised over the previous year. Programme teams suggested that this reflects DFID’s commitment to adaptive programming and the need to update logframes as programmes evolve. While there may be good reasons to change targets, the reasons for changes are not always well documented or explained. This raises the possibility that targets are sometimes revised in order to improve the next year’s annual review score and avoid remedial measures. The Cabinet Office shared with us their concerns about the robustness of DFID’s annual review scores for underperforming programmes, based on a statistical analysis.

There is also evidence that logframe revisions are used to correct for optimism bias in target setting at the design stage – as to both the scale of results that can be achieved and the time it takes to achieve them. A fairly consistent view among programme-level staff was that, to get programmes signed off, targets needed to be ambitious. In our sample, the targets were frequently revised after the first year. When targets were changed, we found no requirement to verify that the original economic case for the programme continued to hold.

Quality assurance over target setting is an area of concern in DFID’s programme management that has potential implications for the focus on value for money. The problem was acknowledged in a November 2017 internal review of DFID’s annual reviews.

DFID has begun to address value for money challenges associated with centrally managed programmes

DFID has a large number of programmes that are managed by central spending departments and that operate across two or more countries. They are extremely diverse, with budgets ranging from under £100,000 to hundreds of millions of pounds. They include research grants, partnerships with international organisations and non-governmental organisations, challenge funds designed to promote innovation and, increasingly, large-scale programmes designed to boost results in priority areas, such as health, education and economic development.

As we have pointed out in other reviews, DFID faces significant problems coordinating between centrally managed and in-country programming. Country offices are unable to keep up with all the centrally managed programmes. (We were informed that there were 135 to 140 operating in Uganda, mostly spending only small amounts of money.) Indeed, it is only recently that they have had access to information on amounts spent in their country by centrally managed programmes. Country offices therefore only engage with those that align most closely with their objectives.

In our case study countries, DFID staff expressed concern that centrally managed programmes may be duplicating in-country programmes or failing to coordinate with them. In Uganda, we heard of one instance where a centrally managed programme was giving away agricultural inputs such as fertiliser, potentially undermining efforts by an in-country programme to build a functioning local market in agricultural inputs. While this may be an isolated case, questions about the coherence of centrally managed and in-country programmes have been raised by country office staff across many of our reviews.

Without country office support, many centrally managed programmes find it difficult to engage meaningfully with partner governments. For example, in our 2016 review of DFID’s support for marginalised girls’ basic education, we reviewed the Girls’ Education Challenge (2012-17), a £355 million investment designed to reach up to a million marginalised girls across 18 countries. The programme was designed in part to promote innovative solutions. However, unless it worked closely with country offices, it had little prospect of persuading national education authorities to take forward successful pilots.

In 2016, DFID issued a Smart Guide setting out steps that the managers of centrally managed programmes should take to ensure they are coherent with in-country programming, such as agreeing rules of engagement and joint governance arrangements. This is a welcome step towards improving coherence, although it is not being applied retrospectively to existing centrally managed programmes.

In some areas, DFID is beginning to use centrally managed programmes to boost the capacity of country offices. In our review of DFID’s approach to inclusive growth, we noted that a new generation of centrally managed programmes was being developed to boost DFID’s capacity and scale up programming in new or technically demanding areas, such as urban development and energy.

Decisions as to where these programmes should operate had been made in consultation with country offices, and some of the programmes came with additional advisory support, which helped to address the coordination issues. The use of centrally managed programmes to supplement country portfolios and capacities is a promising new frontier for improving value for money.

Country offices are looking for opportunities to secure better value for money from multi-bi programmes

In various reviews, ICAI has pointed to value for money risks around bilateral aid projects implemented by multilateral partners (multi-bi aid), which represent around 20% of DFID’s budget. DFID often chooses to deliver through multilateral partners because of mandate issues (such as the UN’s neutrality in conflict contexts), their specialist knowledge or because of a lack of alternatives. However, it does not have the same level of influence over their work as it does for a commercial supplier. Their financial reporting sometimes lacks the detail required to enable DFID to monitor value for money.

In our review of DFID’s approach to fiduciary risk in conflict-affected states, we recommended that DFID explore ways to improve its ability to monitor fiduciary risk management by multilateral partners. Similar concerns arise in respect of how multilateral partners ensure value for money.

In this review, we encountered a range of instances where DFID was working to drive up value for money when delivering bilateral programmes through multilateral partners. For example, in Malawi, the shift away from providing financial aid directly to government has led to DFID becoming more dependent on multilateral partners, given a limited choice of alternatives. This has lent urgency to its efforts to build up its supplier market. DFID Malawi has begun to invite multilateral partners to participate in early market engagement events for forthcoming programmes, alongside commercial suppliers, to develop a sense of competitive pressure. It has also begun to apply a ‘strategic relationship management’ approach to its most important multilateral partners, such as UNICEF, whereby a senior DFID official is given responsibility for managing the partnership across multiple programmes.

Other examples of positive measures included:

- Making more use of organisational effectiveness ratings from the Multilateral Development Review. In one humanitarian programme for refugees in Uganda, DFID informed us that the ratings had guided them on which issues to prioritise in their due diligence of multilateral partners.

- Consideration of multilateral purchasing power in options appraisal. For example, in a family planning programme in Malawi, the choice of delivery channels was influenced by the fact that the United Nations Population Fund (UNFPA) was able to purchase commodities at low prices through its global framework agreement with suppliers. This saved £900,000 on purchases.

- Efforts to negotiate down programme management fees (see Box 10).

- Working with multilateral partners to build their capacity on value for money assessments. For example, in Pakistan, DFID was working with UN agencies to support the development of value for money indicators.