How UK aid learns

Introduction

This light-touch literature review was conducted to ensure that the ICAI rapid review How UK aid learns is informed by key thinking on how learning can take place across a government ecosystem. For efficiency, the literature review makes full use of previous learning compiled as part of ICAI reviews and should be considered an update to the literature review for How DFID learns (ICAI, 2014).

We have included a mix of literature sources to provide a multi-perspective approach. They include peer-reviewed journals, empirical studies, opinion pieces (to broaden understanding) and links to current practice.

We have concentrated on three key themes affecting how UK aid learns. These themes extend beyond official development assistance (ODA), but we believe they are readily applicable to it. The themes are:

- An overview of learning in organisations.

- Potential aspects of learning in aid-spending departments.

- The need for a supportive architecture for learning.

Our aim is to clarify the key elements of good practice in organisational learning and to outline the supportive architecture that provides a context for cross-government learning in ODA delivery. Organisational learning and the UK ODA ecosystem are both changing quickly and on a large scale, so our findings provide a snapshot at this point in time.

As ICAI has observed in many of its reviews, learning is fundamental to the quality and impact of UK aid. Since 2015, the UK government has chosen to involve more departments in spending UK aid. Around a quarter of the £14 billion annual aid budget is now spent outside DFID by 18 departments and funds. This has significantly increased the challenge of ensuring effective learning, both within and between aid-spending departments.

Overview of organisational learning

As we did in How DFID learns (ICAI, 2014), we define learning as the process of gaining and using information, knowledge and know-how to shape policies, strategies, plans, actions and reactions. There are many ways of building a learning organisation, and this literature update does not prescribe a particular approach.

Learning in ongoing change

Even in the most consistent and simple tasks, context and needs are always changing. This calls for some form of ongoing learning and adaptation. That may be a simple exchange of data, or information that is validated, contextualised and shared. It may also be knowledge and know-how that are combined with experience and synthesised to provide guidance and ways forward.

The processes of learning and adaptation during change usually require at least three phases in a cycle:

- Ensure accurate, timely and relevant input or feedback.

- Provide an opportunity to reflect, challenge, assimilate or accommodate.

- Choose, adapt and/or implement timely and appropriate change, then start the cycle again.

These phases draw on aspects of a number of different models of learning during change. One example is Argyris’ (1991) double-loop learning. Among other things, it encourages individuals to reflect on how they define the problem, and on their associated beliefs, before acting. Another approach is reflective learning, which recognises the need to engage with feedback, reflect on it and then act on it, thus completing the learning cycle (Quinton and Smallbone, 2010). We assume that learning, adaptation and ongoing change are taking place in an interdependent and uncertain environment.

An example that reflects the Quinton and Smallbone learning cycle can be found in a case study by the National Audit Office (NAO) on Supporting learning across local government: the Beacon Scheme, described in its report Helping Governments Learn (NAO, 2009: 34-5). The study identifies two key lessons:

- acquiring the right knowledge is a strategic task and rarely falls into an organisation’s lap. There is value in reflecting on where knowledge gaps exist and how these can be filled.

- learning from others works best when it is adapted to local conditions.

The NAO study quotes a senior manager at Gloucester Council, who notes that:

“we got smarter about the sharing of best practice and realised we had to target those areas of the city where we would get the best return. This led us to learn a lot about different types and cycles of collection from people who had piloted them.” (NAO, 2009: 34)

Six elements of learning

Before the changes in ODA delivery that followed the 2015 aid strategy, ICAI completed the How DFID learns review (ICAI, 2014). That review described six elements of learning. They still address the various aspects of learning that should be considered, and are worth restating:

- Clarity – helping to focus the organisation’s learning while emphasising the importance of a shared vision of learning. The shared vision can only really be relevant to those involved if it reflects their own personal interests (Senge, 2006). This creates a joint problem space which comprises an emergent, socially negotiated set of knowledge elements such as goals, problem state descriptions and problem-solving actions (Roschelle and Teasley, 1995).

- Connectivity – seeing the organisation as a learning community. Individuals learn as participants in and contributors to the organisation, and an organisation learns through its members and as part of its marketplace and society (Kira and Frieling, 2005). It is essential to know how individuals relate to their organisations and networks. It is equally important to understand the interplay between constantly evolving informal networks and more persistent formal structures (Capra, 2002; Ibarra et al., 2005). The question is not when social networks will form but when they will be used to transmit information about local innovations that may benefit the firm (Erikson and Samila, 2012).

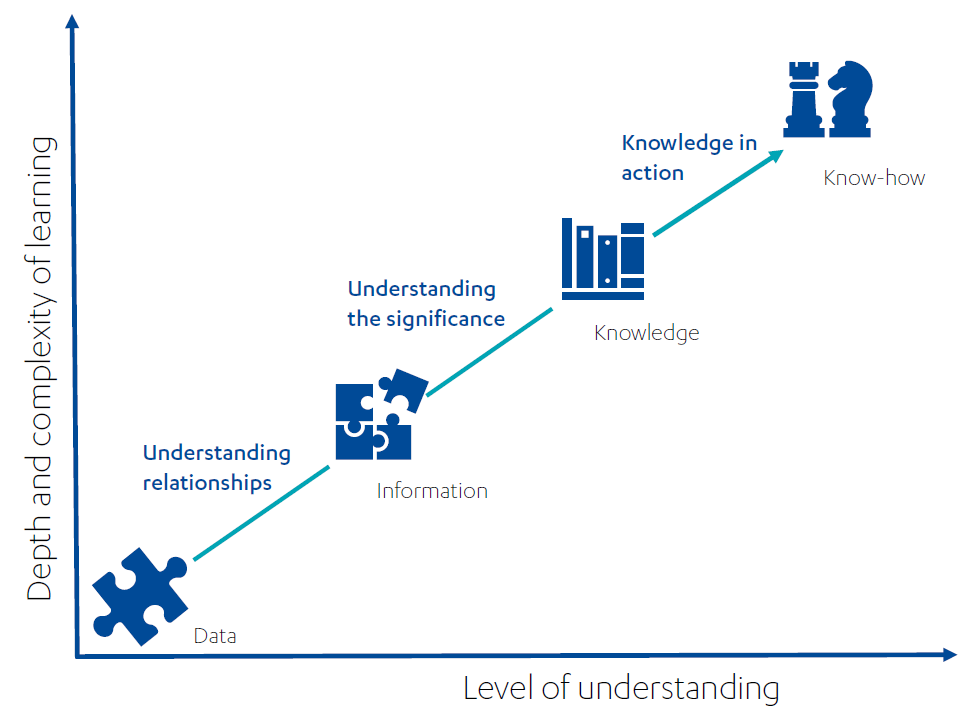

- Creation – developing knowledge and know-how and understanding the important distinctions between data (an ordered sequence of given items/events), information (a context-based arrangement of items whereby interrelationships are shown), knowledge (the judgment of the significance of events and items which comes from a particular context/theory) (Tsoukas and Vladimirou, 2001), and know-how (focusing on the activity) (Cook and Brown, 1999: 388). These key aspects of learning are illustrated in the following diagram:

Figure 1: Different aspects of learning

Source: adapted from Bellinger et al. (2004: 3).

- Capture – embedding information, knowledge and know-how. Piaget’s terms assimilation and accommodation help to explain how information, knowledge and know-how are embedded (Ackermann, 1996). When people assimilate new information, they use their existing beliefs and structures to make meaning. They impose their own order, beliefs and knowledge onto things. In accommodation, people adjust their internal beliefs, ideas and attitudes. They become one with the object of attention. Because this loosens boundaries, it may lead to a momentary loss of control, but it also allows for change (Ackermann, 1996). This also rests on the assumption that learning is the process whereby knowledge and know-how are created through the transformation of experience (Kolb, 1984). Social structures and interactions also play a significant role in learning. This requires a “convergence in educational practices of followers of Piaget and Vygotsky” (DeVries, 2000: 187).

- Communication – sharing information, knowledge and knowing. It is important that the organisation is able to develop, capture, accumulate, store, retrieve, share and apply knowledge and information. The more formal the sharing process, the more expensive it is to maintain (Devane and Wilson, 2009). Also, “in every human organization there is a tension between its designed structures, which embody relationships of power, and its emergent structures, which represent the organization’s aliveness and creativity” (Capra, 2002: 106).

- Challenge – information and knowledge are validated and contextualised against targets and benchmarks, challenging or confirming existing findings and analysis. Ultimately, information, knowledge and knowing in the organisation must support improved value for money and greater impact from aid programmes.

Potential aspects of learning in aid-spending departments

In How DFID learns, ICAI (2014: 1) wrote that “excellent learning is essential for UK aid to achieve maximum impact and value for money”. Learning is fundamental to the ability of any organisation to achieve its objectives. This is particularly true in times of change and uncertainty, when it needs to learn and adapt in real time (Steel, 2016). It is ICAI’s experience that continuous learning is essential to the achievement of impact and value for money. The quality of learning is therefore an area that we explore in many reviews.

Building on the How DFID learns review, this literature update looks at aspects of learning that can impact aid-spending departments and cross-government funds. It also incorporates findings from a number of other ICAI reviews that looked at learning in non-DFID funds and programmes.

In these previous ICAI reviews, we have found that learning processes are often not well integrated into departments’ systems for managing their aid. Learning is frequently treated as a stand-alone exercise or a discrete set of products produced to a predetermined timetable.

The challenge is to ensure that the resulting information, knowledge and know-how are actively used to inform management decisions in real time. This involves building a culture of evidence-based decision making and a willingness to embrace failure as well as success as a source of learning. The ultimate goal of learning systems is not to meet an external good practice standard, but to make departments more agile and effective in the management of their aid portfolios.

An example is the World Bank’s Agile Bank Programme:

“[This was] launched in late 2016 to improve ways of working and promote a culture of continuous improvement through a staff-driven approach. Its goal is to enhance client value through more efficient resource allocation and empowered staff.

The Agile approach is an iterative process to make continuous improvement through incremental gains. New ideas are incubated, tested, rolled out if they work, and discarded if they do not. In fiscal 2018, a community of 200 Agile Champions – from across all operational units – was established to accelerate the testing and mainstreaming of the Agile approach.” (World Bank, 2018: 70)

Learning in formal structures and informal networks

Learning guided from the top of an organisation is important, but it also needs to be embedded and owned at all levels. Corporate objectives and commitments on learning do not automatically ensure that learning is actually happening on the front line. Much depends on how they are derived, applied and passed on. This is important because:

“your front line troops are boundary spanners, that is they have a foot in your organization and a foot in the turbulent, changing, demanding world of your customers, suppliers and competitors. Too often, executives spend the vast majority of their time locked away in internal meetings.” (Moore, 2011)

There is a constant interplay between informal connections and networks (what some would call the positive grapevine; Wells and Spinks, 1994) and the more formal structures designed and implemented to work with and capture data, information and knowledge. Informal networks usually develop as people try to learn from each other and share insights, which often results in spontaneous learning (ICAI, 2014).

The World Bank is taking a particular interest in workplace networks and broader social networks, noting that they can be a powerful stimulus to learning. However, it has found that in the past structural constraints on cross-support and budget constraints on communities of practice tended to hamper connectivity (IEG, 2014).

Both the formal and the informal have clear learning advantages, so the real opportunity lies in working along the continuum between them. For example, Howard Schultz, Starbucks’ former CEO and chairman, has built and fostered the informal organisation, but also recognises that the formal element plays a key role. He said: “You can’t grow if you’re driven only by process, or only by the creative spirit. You’ve got to achieve a fragile balance between the two sides of the corporate brain.” (Muoio, 1998)

Individual and organisational learning

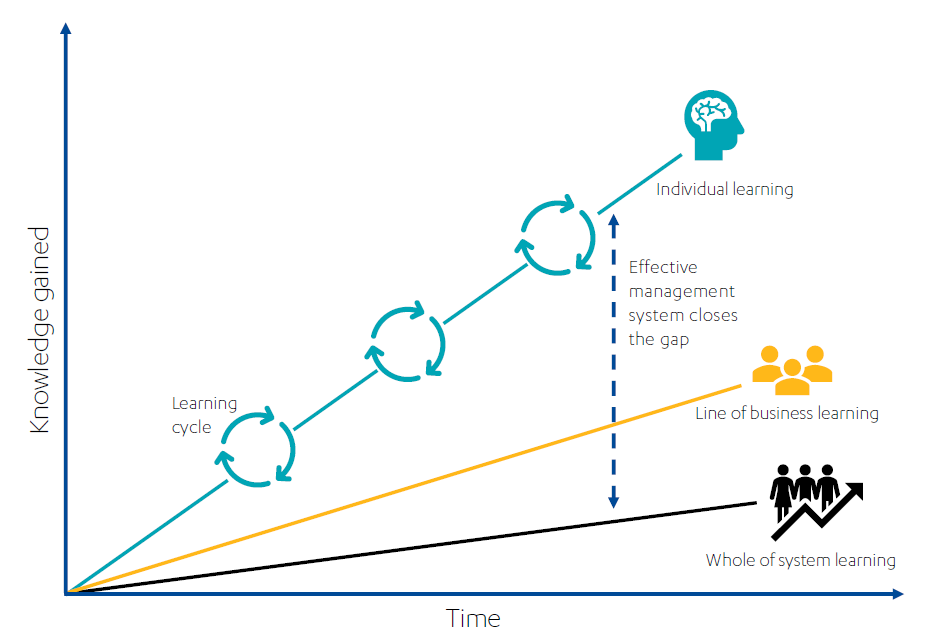

It is individuals that determine whether learning happens or not: learning does not necessarily take place because corporate systems are in place. In reality, people often learn despite deficits in those structures by gaining knowledge through their networks and individual learning cycles. Steel (2016) notes in a blog piece that organisations, on the whole, need to have a more systematic approach, “leveraging information and knowledge across the system into an ever-improving way of doing things”. Since individuals in an organisation do move on, potentially causing corporate amnesia, more formal, systematic learning systems are needed. The diagram below (adapted from Steel, 2016) shows this dynamic between individual and organisational learning:

Systems in themselves will not suffice, however – they have to be designed around actual and understood need. Individuals need to see the benefit of using them and breathe life into them (Hamlin, 2012: 221 and 228). What principles need to be instilled to foster a desire and passion to learn, to make things accessible and to share what is learned?

Individuals can exemplify sharing behaviours across the whole organisation, but how do you get those isolated examples to spread? In other words, when will networks be used to transmit information about local innovations that may benefit the firm (Erikson and Samila, 2012)?

Figure 2: Closing the gap between individual and organisational learning

Learning as experience

The staff surveys reviewed in How DFID learns highlighted an interesting dichotomy. The surveys showed that people generally seem to believe that most learning comes from formal training (ICAI, 2014: Annex 4). As a result, they do not consciously see their experience as learning. Yet, when speaking about working with beneficiaries of aid, people will often talk about the transformational effect their experience on the ground has had on them. Nothing can replace people actually learning on the front line and sharing that learning with peers inside and outside the organisation.

In acknowledgement of this, the UK civil service has adopted a 70:20:10 model for improving the capability of individuals: 70% of a person’s learning takes place through experience, 20% through others (such as mentors or peers) and 10% from formal learning. The model, together with information on the informal learning opportunities for individuals and teams that exist in the civil service workplace, can be found in Your guide to learning in the workplace (Civil Service Learning, 2015).

In a learning organisation there is a need to continue to encourage a culture of free and full communication about what does and does not work. Staff should be encouraged always to base their decisions on experience and evidence, without any bias to the positive. They, and beneficiaries, need to be able to share their know-how and experience to help themselves and their organisations to learn, adapt and change.

As noted in the International Journal of Nursing Sciences, “The clinical experience is a crucial aspect of learning nursing practice which enables students to connect theoretical and conceptual knowledge” (Phillips et al., 2017: 205). Similarly, another study found that “The early practical experiences gave students opportunities to apply and integrate their previous knowledge, develop interpersonal skills and appreciate the value of patient-centred care.” (AlHaqwi and Taha, 2015)

AMN Healthcare Education Services offers a further perspective on the importance of learning through experience. In a blog piece, AMN’s clinical content director notes that:

“Fundamentally, clinical experiences provide an opportunity for nurses – pre-licensure and active license – to expand their skills and knowledge to practice safe patient care. Clinical experiences are important throughout a nurse’s career – student or experienced – because they provide a roadmap to patient care decisions and professional development. Without this, nurses are unable to function in an autonomous role as patient advocates, as well as contribute to global healthcare initiatives.” (Baclig, 2015)

Learning and emergence

So how do you formalise learning without stifling an individual’s experiences or an organisation’s learning processes? How can informal social networks be supported in the process of continuous learning while accessing patterns and insights? How do you encourage constant evaluation throughout an ODA spending initiative so that appropriate adjustments can be made to improve the work, or quickly pick up on potential dead ends and ineffective practices?

One element that is key to addressing these questions is to approach aspects of learning as an emergent process. This is critical in the volatile, uncertain, complex and ambiguous social and physical environment we find ourselves in. As noted by Downs et al. (2003: 3):

“Complex systems are incompressible, i.e., it is impossible to have a complete account of a complex system that is less complex than the system itself. Therefore, given that uncertainty is not a result of ignorance or the partiality of human knowledge but is a characteristic of the world itself, strategies designed to reduce or eliminate uncertainty are likely to be ineffective at best and may very possibly be a risk to organisational survival.”

To work with uncertainty, Moore (2011), writing in the Ivey Business Journal, suggests that instead of a deliberate, linear approach, an emergent approach would allow a strategy to emerge over time as intentions collide with, and accommodate, a changing reality. As Downs et al. (2003: 1) suggest:

“The emergent approach to strategy formulation has been characterized by trial, experimentation, and discussion; that is, by a series of experimental approaches rather than a final objective. Emergent strategy is undertaken by an organization that analyzes its environment constantly and implements its strategy simultaneously.”

Learning in complexity and constant change

Amidst all the unknowns we are facing in ODA, one certainty is that we need an aid delivery capability that can work with major change effectively. In his NAO blog The glue to managing change, Steel (2016) comments on the need for the civil service to be able to manage major change effectively – not only new change but all the existing transformation of public services.

Steel (2016) suggests that government departments are very good at responding to crises, such as a situation in a fragile country, by amassing teams and getting people to where they need to be. However:

“the scale of new changes, on top of the existing transformation of the way public services are being delivered – including complex contracts and delivery chains, extensive devolution and new technologies – will make it extremely challenging to manage the interdependencies, unintended consequences and unexpected consumer reactions.”

Steel’s blog article points out three keys to managing change:

- Using information to improve: “it’s not ok to make decisions in an ill-informed, out-of-sight out-of-mind way”.

- Having an end-to-end perspective: “a better shared understanding of how entire end-to-end systems work, whether they are performed by other departments, local government, third parties or users of services”.

- Learning: “organisations and government as a whole need to develop a culture of learning from mistakes and successes”.

Major change can also bring with it increased complexity and ambiguity. Thiry (2011), whose paper focuses on ambiguity in project management, notes that ambiguity is linked to complexity: the more choices available, the higher the possibility of ambiguity. This is problematic for project managers, who are traditionally asked to make rational decisions focused on performance and results. Thiry maintains that increasing ambiguity requires intuitive decision making and a focus on learning (sensemaking). This takes us beyond linear cause/effect approaches to change and introduces the possibility of a more dynamic and holistic awareness.

Continuous learning

A conclusion of the How DFID learns (ICAI, 2014) review was the need to concentrate on learning within the delivery management cycle rather than at the beginning and end only. Adaptive programming is particularly appropriate for unstable environments, since it starts from the assumption that not everything can be known at project outset (Derbyshire and Donovan, 2016).

It is important to foster the ability to learn and adapt quickly, especially when there is potential for a research-practitioner split: a gap between academic theories and on-the-ground reality. Day-to-day reflexivity and learning must be built into a culture where staff are given time to reflect on what they are learning (ICAI, 2014: 24).

The World Bank also suggests in its guidelines that during project implementation:

“task teams are expected to continuously monitor the current relevance of the project objectives and the likelihood of achieving them, and when needed should take action to restructure the project” (World Bank, 2006: 43).

More specifically, infoDev aims to “ensure that monitoring and evaluation techniques feed into short feedback loops that enable infoDev and its stakeholders to continuously build, measure, and learn” (infoDev, 2014: 60).

Adaptive ways of working

There has been increased interest within the international development community in the concept of ‘doing development differently’ and adaptive ways of working (Tulloch, 2015: 2). This has coincided with debates on how to achieve the Sustainable Development Goals and the shift of approach that will be needed to succeed in a complex, interdependent and fast-changing environment.

Adaptive programming

“There’s a lot of discussion within international development at the moment around the idea of ‘adaptive programming’. This is in response to a long held belief and growing evidence that development is complex, context-specific, non-linear and political, and that conventional development interventions too often fail to take this into account.” (Lloyd and Schatz, 2016)

Adaptive programming responds to several key understandings about development and learning:

- development players may not be able to fully understand the circumstances on the ground until they are engaged.

- these circumstances often change in rapid, complex and unpredictable ways, requiring ongoing learning.

- the complexity of development processes means that development players rarely know at the outset how to achieve a given development outcome, even if there is agreement on the outcome.

As O’Donnell suggests:

“because you cannot predict in advance exactly how your intervention will play out in addressing a complex problem or how the context will change, adaptive management requires that such analysis is ongoing, not once-off. Systems keep changing around you, therefore you need to ‘act, sense and respond’ iteratively.” (O’Donnell, 2016: 8)

At a minimum, development players should learn and respond to changes in the political and socio-economic operating environment. More substantially, a programme may recognise from the outset that change is inevitable, and build in ways to draw on new learning to support adaptations (Valters et al., 2016: 5).

For example, Ramalingam et al. (2019: 3) envisage that adaptive programming principles can be employed at a variety of stages of a programme cycle. They include:

- at the early design stage when they can be used as a guide to action.

- at key reflection points, to consider how to improve the existing monitoring, evaluation and learning (MEL) system and ensure continual iterative monitoring and learning, and to measure whether the theory of change or causal assumptions/mechanisms are as expected or whether adjustments are needed.

- at the outcome and impact evaluation stage, to assess how well an adaptive programme performed, including whether and how MEL data and systems supported adaptive management ambitions in practice.

At this point it is important to look at Derbyshire and Donovan’s (2016) sobering report Adaptive Programming in Practice, which sets out the shared lessons from the DFID-funded LASER and SAVI programmes. These lessons indicate that adaptive programming is effective in achieving institutional reform results in complex environments. The challenge, however, is that designing, contracting and implementing programmes which work in adaptive ways is time-consuming and difficult and often involves swimming against the tide of conventional and well-embedded practice. This is resonant with Peter Vowles’ blog on Adaptive Development (2016).

Derbyshire and Donovan’s (2016) findings suggest that strong technical leadership is needed for a programme to adapt appropriately in the light of ongoing discovery about the problems and the solutions necessary to address them. Adaptive programmes also require staff who are willing and able to facilitate and provide support behind the scenes. They also need systems that build the confidence and skills of staff and partners to work in an adaptive manner.

Systems are also needed to encourage ongoing learning and reflection informed by robust evidence and to scale activities up and down in response. However, many of the systems in place have been established to ensure accountability and compliance. These systems rely on pinning down details of work plans, budgets and personnel inputs up front and delivering against these. This approach inherently closes down the space and flexibility required for adaptive planning.

According to O’Donnell (2016: 12), many still depend on a more linear approach. He believes that if adaptive management is to become more mainstream, the following challenges will need to be addressed:

- is there enough evidence to show that addressing complex problems with adaptive approaches is more effective than with more linear approaches?

- how can pressures to demonstrate the sorts of tangible, attributable results which are expected by many funders, civil society organisation leaders and the general public in donor countries be reconciled with the messy reality of adaptive management of complex problems?

- if the ability to predict the results of interventions is accepted as being low, how can those intervening best be held accountable for responsible spending of funds? How can ‘well-managed failure’ be distinguished from ‘poorly-managed failure’?

- what does value for money look like in adaptive programmes?

- can the attitudes of curiosity, reflection, risk-taking and acceptance of uncertainty needed for adaptive management be fostered sufficiently to scale it up?

We would also add that adaptive ways of working depend on effective individuals and teams where relationships are based on trust and there is a strong commitment to learning and reform.

“By working together in close proximity over an extended period, they develop a rhythm, rapport, common identity, and trust that vastly improves their ability to build on each other’s ideas and solve business and technical problems.” (McDermott, 1999: 2)

Software development – agile versus waterfall

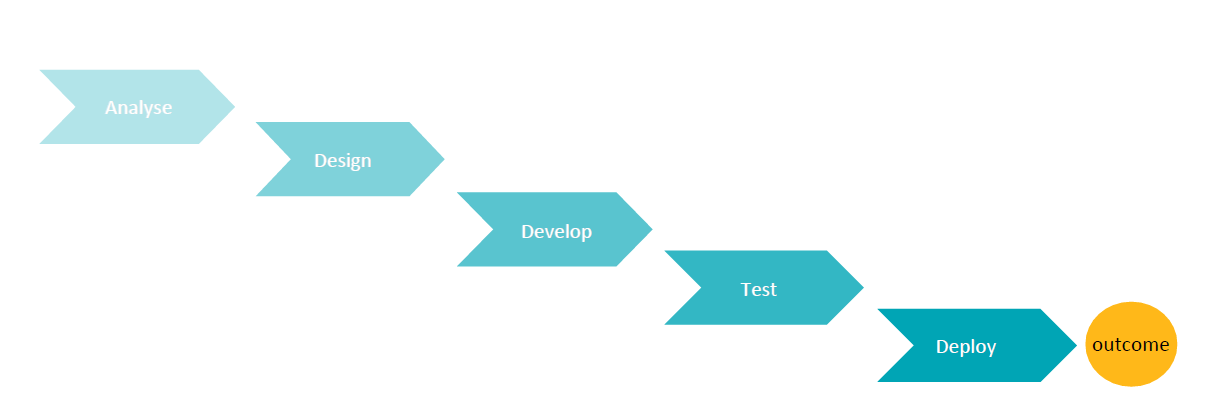

At this point it is useful to look at how software development in IT has evolved. While the language is somewhat different, there are interesting links between the waterfall and agile methodologies in software development and aid delivery processes.

The waterfall model is a sequentially structured approach in which the development team moves on to the next stage of development only after the previous stage is complete.

“Software development companies, adopting this model, spend a considerable amount of time in each stage of development, till all doubts are cleared and all requirements are met. The belief that drives this kind of software development model is that considerable time spent in initial design effort corrects bugs in advance. Once the design stage is over, it’s implemented exactly in the coding stage, with no changes later. Often the analysis, design and coding teams are separated and work on small parts in the whole developmental process.” (McCormick, 2012)

In the past, government, health and welfare IT projects usually took this waterfall approach, first introduced in the 1970s by Winston Royce (see, for example, Royce, 1987). Such projects often involved huge waste, and some became obsolete by the end of implementation.

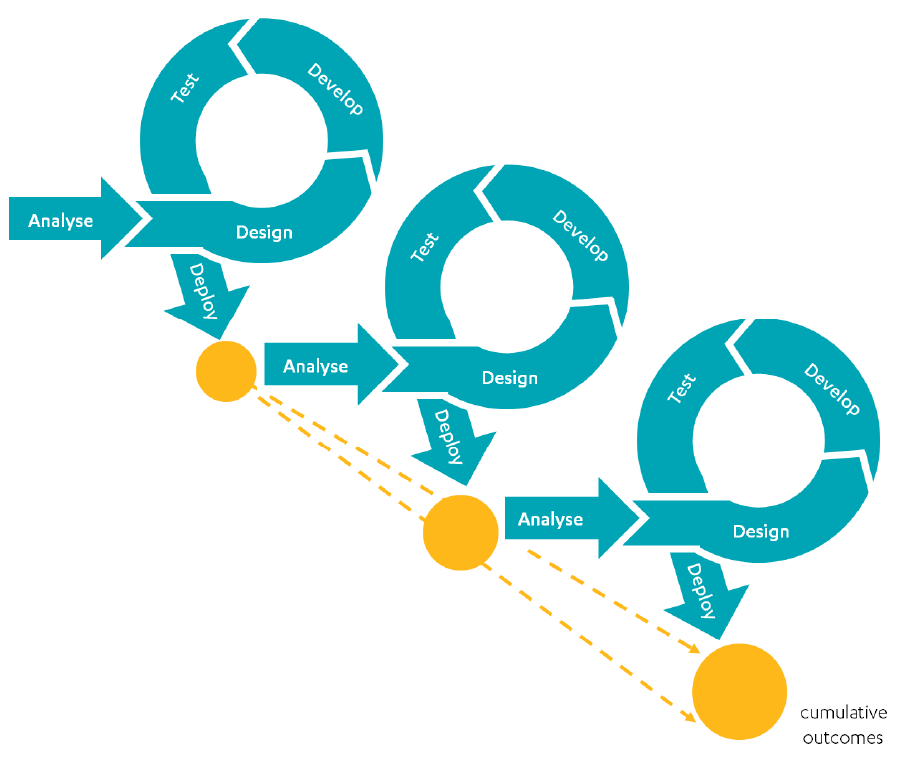

Compared to the set-in-stone approach of the waterfall methodologies, the agile approach focuses on agility and adaptability in development. This involves multiple iterative development schedules that seek to improve the output with every iteration.

“The design is not set in stone and is kept open to last minute changes due to iterative implementation. The team structure is cross functional, closely knit and self-organising. The design idea is never totally frozen or set in stone, but it’s allowed to evolve as new ideas come in with each release. Less importance is given to documentation and more to speed of delivering a working program.” (McCormick, 2012)

For a pictorial comparison, please see the figure below:

Figure 3: Waterfall versus agile models of development (panels: waterfall model; agile model)

Source: adapted from California Department of Technology (2017).

The agile model is focused on iterative and continuous learning. It enables teams to fail quickly, change tack, prototype, test and adapt on the go, and to provide effective solutions that give value for money. The evolution that has taken place in software development around agility is clearly applicable to how we look at adaptability, learning and change in aid design and delivery.

Assessment framework – what does good look like?

At the beginning of this literature update we talked about six elements of organisational learning (clarity, connectivity, creation, capture, communication and challenge). Of these, perhaps the most useful in assessing what good looks like is the creation element. This identifies a number of stages: how data becomes information, how information becomes knowledge and how knowledge becomes know-how. This is somewhat similar to the stages in recognising a word, spelling the word, knowing the definition of the word, and using the word in a sentence.

The NAO’s Financial Maturity Model (NAO, 2013) suggests a way to assess the different stages of what good looks like in organisational learning. This model looks at different levels of maturity, from existing through functioning, enabling and challenging to optimising. For example, a level one department might have practices in place (what the model calls existing) but they are incomplete and inadequate. A level five department, by contrast, could be expected to have leading-edge practices in place (what the model calls optimising). Of course, not all departments need to aim for level five, because the appropriate level will depend on their requirements.

It is interesting and perhaps necessary to consider a similar framework to assess the maturity or stages of learning in delivering aid within and between government departments. It should be possible to create an assessment framework that aligns the stages of learning with the levels of maturity suggested in the NAO model. Such a framework would give some assurance that departments and funds are at the appropriate stages of learning depending on the complexity and size of their ODA spend.

Need for a supportive architecture

An ODA learning culture is strongly influenced by the key relationships, structures and processes of the government within which it operates. With this in mind, it is useful to look at the changes in UK aid strategy and the architectural support that is necessary for learning to influence ODA spend across government.

A step change in ODA delivery

Since the publication of the 2015 UK aid strategy (HM Treasury and DFID, 2015) there have been significant changes in how UK aid is delivered: a diversity of budgets and a fragmentation of responsibility. With this has come the recognition that different departments need to learn and share responsibility for ODA delivery in new ways.

Learning is a key dimension of ODA delivery and depends on the overall processes put in place. In light of the need for a supportive architecture, do the current systems and processes enable learning? As the literature suggests, there are no easy answers, but there are principles and approaches that can help departments learn. What is possible and effective depends on context, time and tasks.

Joining up government

Wiring it Up, published by the Cabinet Office, starts with the statement:

“This report addresses the concern that Whitehall works least well in dealing with issues that cut across departmental boundaries, such as tackling social exclusion, fostering small businesses or protecting the environment.” (Cabinet Office, 2000: 9, para 2.2)

In their article What works in Joined-Up Government? An Evidence Synthesis (2015), Carey and Crammond suggest that coordination across departments, which has been on the government agenda since the late 1990s, is now viewed as being at the core of government and public administration.

However, in the Institute for Government’s report Joining up Public Services around Local Citizen Needs (2015), Wilson et al. point out that although joined-up government has been heralded as the way to reduce duplication, make efficiency savings and improve public services, it is not easy to do. Significant barriers need to be overcome and up-front investments are needed before benefits are seen.

Elements of a supportive architecture

In concluding their synthesis, Carey and Crammond (2015) note that empirical studies have consistently suggested that the instruments used for collaboration are, in themselves, often inadequate or inappropriate for the context (Keast, 2011). This is due to a lack of strong supportive architecture (O’Flynn et al., 2011: 248). There is often a mismatch between the goals these instruments aim to achieve, the mechanisms used to achieve them, and the level at which they are deployed (Keast, 2011).

O’Flynn et al. (2011: 253) warn that:

“without careful attention to, and investment in, creating [supportive] architecture, most attempts at joined-up government are doomed to fail as the power embedded in ways of doing things restrains innovation and undermines cooperation”.

The table below outlines the elements of a supportive architecture. Hard elements pertain to structure, while soft elements are aimed at creating cultural and institutional change.

| Hard elements | Soft elements |
|---|---|
| A mandate for change | Deliberate and strategic focus on collaboration |
| Decentralised control | Training and skills development |
| Accountability and incentive mechanisms | A call to action or a rallying point |
| Dedicated resources (including flexibility in the way they are used at different levels) | Information sharing |

Carey and Crammond (2015) conclude that there is a need for multiple instruments and flexibility. Joined-up reforms need to activate multiple leverage points but must also remain fluid and flexible. Joining up is a dynamic process in itself and, as it progresses, instruments and approaches need to be able to shift within it. For example, guidelines that support the creation of a new working group might in time become limiting, not allowing it to evolve. There needs to be a willingness to add, remove or refine processes over time.

The International Development Committee describes the tension at the heart of this work:

“There is a conundrum in any joined-up or cross-government working, which is the balance between the opportunity of benefitting from different views and new skills and expertise and the risks of strategic incoherence, duplication and other unintended consequences.” (International Development Committee, 2018: 35, para 13)

Wilson et al. (2015) draw on ten case studies to show that, although joined-up government can be hard, it can be achieved if the right building blocks are in place:

- using multi-disciplinary teams can focus attention on complex issues.

- agreeing on clear, outcomes-focused goals can help front-line organisations prioritise resources effectively.

- using evidence can build consensus and help to draw in resources from a range of organisations.

- building on existing programmes and structures can enhance existing good practice and partnerships on the ground.

- giving local areas greater flexibility can help local actors form the partnerships needed to deliver cross-cutting outcomes.

- balancing this with some central government support can provide the additional resources and political momentum needed to get an initiative off the ground.

- building the desire for joined-up services into the aims and processes of commissioning can incentivise organisations to collaborate.

- engaging a broad range of stakeholders throughout the design process can help to build buy-in and commitment to partnership working.

- sharing learning and experiences widely can help to ensure that effective models are built on.

- physically bringing organisations together can help to overcome entrenched cultural differences and data-sharing challenges.

It is also important to remember, as Benoit Guerin and colleagues at the Institute for Government point out:

“The public sector needs a system of accountability which is focused on improvement, not just blame. Studies by various ombudsmen have shown that the public care more about learning lessons than retribution. Similarly, multiple governments have stressed that the primary purpose of public inquiries is ‘preventing recurrence’, rather than attributing faults.” (Guerin et al., 2018: 32)

Summary

In this light-touch literature review we have concentrated on three key themes that address how UK aid learns. As noted in the introduction, these themes go beyond the specific focus on ODA but are readily applicable to this review. The themes are:

- An overview of learning in organisations – this introduced learning in a context of ongoing change and gave an overview of organisational learning using six elements.

- Potential aspects of learning in aid-spending departments – this dealt more specifically with influences that organisations encounter in their learning journey. It included a brief introduction to the possibility of a self-diagnosis process using an assessment framework.

- The need for a supportive architecture for learning – this introduced the supportive context needed for learning to thrive in government.

The aim of this literature update has been to highlight the key influences on learning in the government’s ODA delivery, with a particular focus on the challenge of ensuring effective learning given the amount and rate of change that we currently face in the UK ODA ecosystem.

Bibliography

- Ackermann, E. (1996). “Perspective-taking and object construction: Two keys to learning”. In Kafai, Y. and Resnick, M. (eds) Constructionism in practice: Designing, thinking and learning in a digital world. Mahwah, NJ: Lawrence Erlbaum Associates, pp. 25-37, link.

- AlHaqwi, A.I. and Taha, W.S. (2015). “Promoting excellence in teaching and learning in clinical education”. Journal of Taibah University Medical Sciences 10(1), March, pp. 97-101, link.

- Argyris, C. (1991). “Teaching smart people how to learn”. Harvard Business Review 69(3), pp. 99–109.

- Baclig, J.T. (2015). “Life cycle of a nurse: clinical nursing experience”. Nursing News [online]. AMN Healthcare, link.

- Bellinger, G., Castro, D. and Mills, A. (2004). “Data, Information, Knowledge and Wisdom”. Systems Thinking [online], pp. 1-5, link.

- Cabinet Office (2000). “Wiring it up: Whitehall’s management of cross-cutting policies and services”. A performance and innovation unit report. London: Cabinet Office, January, link.

- California Department of Technology (2017). “Waterfall and agile – product development cycle”. Project Delivery Resources [online], 22 September, link.

- Capra, F. (2002). The hidden connections. London, UK: HarperCollins Publishers.

- Carey, G. and Crammond, B. (2015). “What works in joined-up government? An evidence synthesis”. International Journal of Public Administration 38(13-14), pp. 1020-1029, link.

- Civil Service Learning (2015). Your guide to learning in the workplace – version 2.2. Civil Service Learning, link.

- Cook, S.D.N. and Brown, J.S. (1999). “Bridging epistemologies: the generative dance between organizational knowledge and organizational knowing”. Organization Science 10(4), pp. 381-400, link.

- Derbyshire, K., and Donovan, E. (2016). Adaptive programming in practice: shared lessons from the DFID-funded LASER and SAVI programmes. UK Government, August, link.

- DeVries, R. (2000). “Vygotsky, Piaget, and education: a reciprocal assimilation of theories and educational practices”. New Ideas in Psychology 18(2-3), pp. 187-213, link.

- Devane, S., and Wilson, J. (2009). “Business benefits of non-managed knowledge”. Electronic Journal of Knowledge Management 7(1), pp. 31-40, link.

- Downs, A., Durant, R. and Carr, A.N. (2003). “Emergent strategy development for organisations”. Emergence: Complexity and Organization 5(2), pp. 5-28, link.

- Erikson, E. and Sampsa, S. (2012). “Decentralization, Social Networks, and Organizational Learning”. DRUID Working Papers 12-01, pp. 1-30, link.

- Guerin, B., McCrae, J., and Shepheard, M. (2018). Accountability in modern government: what are the issues?: A working paper. Institute for Government, April, link.

- Hamlin, S.C. (2012). An exploration of the themes which influence the flow of communications/information around an organisational structure. PhD in Organisational Science, University of Sheffield.

- HM Treasury and DFID (2015). UK aid: tackling global challenges in the national interest. UK Government, November, link.

- Ibarra, H., Kilduff, M. and Tsai, W. (2005). “Zooming In and Out: Connecting Individuals and Collectivities at the Frontiers of Organizational Network Research”. Organization Science 16(4), pp. 359-371, link.

- ICAI (2014). How DFID Learns. ICAI, April.

- ICAI (2017). The cross-government Prosperity Fund: a rapid review. ICAI, February, link.

- ICAI (2017b). The Global Challenges Research Fund: a rapid review. ICAI, September, link.

- ICAI (2018). The Conflict, Stability and Security Fund’s aid spending: a performance review. ICAI, March, link.

- ICAI (2019). International Climate Finance: UK aid for low-carbon development: a performance review. ICAI, February, link.

- ICAI (2019b). The Newton Fund: a performance review. ICAI, June, link.

- InfoDev (2014). The Business Models of mLabs and mHubs — An Evaluation of infoDev’s Mobile Innovation Support Pilots. Washington, DC: World Bank, link.

- IDC (2018). Definition and administration of ODA: Fifth Report of Session 2017-19. International Development Committee, House of Commons, HC 547, 5 June, link.

- Keast, R.L. (2011). “Joined-up governance in Australia: how the past can inform the future”. International Journal of Public Administration 34(4), pp. 221-231, link.

- Kira, M. and Frieling, E. (2005). “Collective learning building on individual learning”. Discussion paper for the Fifth Annual Meeting of the European Chaos and Complexity in Organizations Network (ECCON), Netherlands, 21-22 October 2005, 8 September, pp. 1-12.

- Kolb, D.A. (1984). Experiential learning: experience as the source of learning and development. Englewood Cliffs, NJ: Prentice Hall.

- Lloyd, R. and Schatz, F. (2016). “Norwegian aid and adaptive programming”. Blog [online]. Itad, August, link.

- McCormick, M. (2012). Waterfall vs. agile methodology. MPSC Inc, link.

- McDermott, R. (1998). “Learning across teams: The role of communities of practice in team organizations”. Knowledge Management Review, May/June, pp. 1-8, link.

- Moore, K. (2011). “The Emergent Way: How to achieve meaningful growth in an era of flat growth”. Ivey Business Journal [online, no page numbers], issue November/December, link.

- Muoio, A. (1998). “Growing smart: unit of one”. Fast Company [online, no page numbers], 31 July, link.

- NAO (2009). Helping Government Learn: Report by the Comptroller and Auditor General. National Audit Office, HC 129 Session 2008-2009, 27 February, link.

- NAO (2013). Financial Management Maturity Model. National Audit Office, 20 February, link.

- O’Donnell, M. (2016). Adaptive management: what it means for CSOs. Bond, link.

- O’Flynn, J., Buick, F., Blackman, D. and Halligan, J. (2011). “You win some, you lose some: Experiments with joined-up government”. International Journal of Public Administration 34(4), pp. 244-254, link.

- Phillips, K.F., Mathew, L. and Aktan, N. (2017). “Clinical education and student satisfaction: An integrative literature review”. International Journal of Nursing Sciences 4(2), pp. 205-213, link.

- Quinton, S. and Smallbone, T. (2010). “Feeding forward: using feedback to promote student reflection and learning – a teaching model”. Innovations in Education and Teaching International 47(1), pp. 125-135, link.

- Ramalingam, B., Wild, L. and Buffardi, A.L. (2019). “Making adaptive rigour work: Principles and practices for strengthening monitoring, evaluation and learning for adaptive management”. Briefing Paper. Overseas Development Institute, April.

- Roschelle, J. and Teasley, S.D. (1995). “Construction of shared knowledge in collaborative problem solving”. In O’Malley, C. (ed). Computer-supported collaborative learning. New York: Springer-Verlag, link.

- Royce, W.W. (1987). “Managing the development of large software systems: concepts and techniques”. In Proceedings of the 9th international conference on software engineering. Los Alamitos, CA: IEEE Computer Society Press, pp. 328-338.

- Senge, P.M. (2006). The fifth discipline: The art and practice of the learning organisation. London, UK: Random House.

- Steel, A. (2016). “The glue to managing change”. NAO blog, 4 October, link.

- Thiry, M. (2011). “Ambiguity management: the new frontier”. Paper presented at PMI® Global Congress 2011 – North America, Dallas, TX. Newtown Square, PA: Project Management Institute, link.

- Tsoukas, H. and Vladimirou, E. (2001). “What is Organizational Knowledge?” Journal of Management Studies 38(7), pp. 973-993, link.

- Tulloch, O. (2015). What does ‘adaptive programming’ mean in the health sector? Overseas Development Institute, link.

- Valters, C., Cummings, C. and Nixon, H. (2016). Putting learning at the centre: Adaptive development programming in practice, ODI Report. Overseas Development Institute, March, link.

- Vowles, P. (2016). “Adaptive development: great progress and some niggles”. Medium blog, 26 July, link.

- Wells, B. and Spinks, N. (1994). “Managing Your Grapevine: A Key to Quality Productivity”. Executive Development 7(2), pp. 24-27, link.

- Wilson, S., Davison, N., Clarke, M. and Casebourne, J. (2015). “Joining up public services around local, citizen needs: Perennial challenges and insights on how to tackle them”. Discussion Paper. Institute for Government, November, link.

- World Bank (2006). Implementation completion and results report: guidelines. OPCS, August 2006, last updated on 10/05/2011, link.

- World Bank (2018). Annual Report 2018: Ending poverty. Investing in opportunity. World Bank Group, link.

- IEG (2014). Learning and Results in the World Bank’s Operations: How the Bank learns: Evaluation 1. Independent Evaluation Group, World Bank Group. Washington: IBRD and World Bank, July, link.